| Author | Affiliation |
|---|---|
| Melissa A. Sutherland, PhD, RN | Boston College, William F. Connell School of Nursing, Chestnut Hill, Massachusetts |
| Angela F. Amar, PhD, RN | Emory University, Nell Hodgson Woodruff School of Nursing, Atlanta, Georgia |
| Kathryn Laughon, PhD, RN | University of Virginia School of Nursing, Charlottesville, Virginia |


ABSTRACT

Introduction: Students aged 16–24 years are at greatest risk for interpersonal violence and its resulting short- and long-term health consequences. Electronic survey methodology is well suited to research on interpersonal violence, yet methodological questions remain about best practices for using electronic surveys. While researchers often report that potential participants received multiple reminder emails to complete the survey, little mention is made of who sent the recruitment email. The purpose of this analysis is to describe the response rates from three violence-focused research studies when the recruitment emails were sent by a campus office, a researcher, or a survey sampling firm.

Methods: Three studies of interpersonal violence among college students were conducted in the United States. Seven universities and a survey sampling firm were used to recruit potential participants to complete an electronic survey. The sender of the recruitment emails varied within and across the studies, depending on institutional review board and university protocols.

Results: An overall response rate of 30% was noted for the 3 studies. Universities where researchers sent the recruitment emails had higher response rates than universities where campus officials sent them. Response rates to electronic surveys were lower at Historically Black Colleges or Universities (HBCUs), where other methods were needed to improve response rates.

Conclusion: The sender of recruitment emails for electronic surveys may be an important factor in response rates for violence-focused research. Researchers need to identify best practices in survey methodology that promote accurate disclosure and increase response rates.

INTRODUCTION

Electronic surveys are a widely used method of collecting data from large samples efficiently and quickly. They are particularly advantageous with younger, more technology-savvy populations and for collecting data on sensitive topics confidentially.1,2 Research on interpersonal violence may be facilitated by electronic surveys and the confidentiality, and often anonymity, they offer to victims and perpetrators. Much of the existing research compares electronic surveys with telephone or face-to-face surveys, reports response rates, and compares responders with non-responders. Yet methodological questions remain about other factors (e.g., the sender and subject line of the email) that may influence recruitment and response rates.

Limited research explores methodological questions associated with sample recruitment for electronic surveys. Researchers must identify the best ways to get potential participants to the survey website and have them complete the survey. Common strategies include notification via email or postal mail,3–5 publicity campaigns,6 and use of a third-party sampling company,7 and much has been written about the timing of contacts.8 Because there are no public directories of email addresses, gaining access to email addresses and listservs is a challenge for researchers. Furthermore, potential participants decide, based on the sender and subject line, whether to open the email and, in turn, whether to respond to the survey. A key consideration is therefore the sender of the email, which could be a university office, the researcher, or a survey sampling firm. The purpose of this research was to describe the response rates for violence-focused studies using survey methodology. The primary aim was to examine differences in response rates when the electronic survey invitation comes from a campus office, a researcher, or a survey sampling firm. A secondary aim was to explore response rates for electronic and paper survey administration at predominantly minority institutions.

BACKGROUND

Electronic surveys allow subjects to participate in research using computers and web-based technology. They have become an increasingly popular research method, as evidenced by the growing literature on electronic survey methods. Both online capability and the equipment available to participants have continued to grow, allowing greater access to electronic surveys.9 Electronic surveys have been used by researchers in a variety of fields, including health, policy research, and education. In particular, electronic survey methods are well suited to studying sensitive behaviors,10,11 including interpersonal violence,3–5 especially among college students.

Benefits and Challenges of Electronic Surveys

Documented benefits of electronic surveys include lower costs, shorter response times, researcher control of the sample, and efficient data entry.9,12,13 Despite these benefits, internet access and response rates are documented challenges of electronic surveys.14,15 Comparisons reveal that response rates to electronic surveys can be 11–20% lower than those of other survey methods.16,17 In contrast, response rates do not vary significantly between electronic and mail surveys in most college student samples.17

College students are an ideal population for electronic surveys because they are a homogeneous group that can be targeted within a known population, allowing respondents to be compared with the target population on key demographic variables.7 Previous research using electronic surveys on alcohol use and violence among college students suggests that an acceptable return rate for electronic surveys is 30–35%, with studies reporting response rates between 2% and 35%.3,6,8,18,19 Differences in how participants were contacted and recruited could account for this variation in response rates. While researchers often report that participants received multiple reminders to complete the survey, little mention is made of who initiated contact with potential participants.

College students are affected by the high rates of interpersonal violence seen among adolescents.20–22 Interpersonal violence is often unreported to campus officials and is associated with health, social, academic, and lifestyle consequences, which makes it a priority area for research.23 Several studies on violence have used electronic surveys, and studies on stalking show key differences in response rates. Reyns et al3 report that after an email was sent from the university registrar’s office, 13.1% of potential participants completed the survey, while Buhi et al19 report a 35% response rate with no mention of the sender of the recruitment email. Amar et al4 describe an email sent by the teaching assistant but do not report a response rate. Finally, in a study on dating violence, Harned5 contacted students using a mailed invitation to participate in an electronic survey and obtained a response rate of 38%. Across this group of studies, the lowest response rate occurred when the communication came from someone outside the study who held a central university role. For scientists focused on violence research, identifying best practices for survey recruitment is needed to increase response rates and improve data quality.

Recruitment Strategies

Methods of contacting potential participants described in the research literature include mail recruitment and the use of a survey sampling firm. Mail recruitment includes postal mail and email, and a substantial body of research documents effective practices for postal mail recruitment.8 Less research has examined email recruitment, which is often a mass email sent by either a campus office or the researcher. Email recruitment provides a mechanism to contact eligible participants directly and invite them to participate in the research. Response rates to electronic surveys vary in the existing violence literature on college students and suggest that the sender of the recruitment email may be an important factor.3–5,19

A survey sampling firm can also be used to recruit participants. These companies maintain lists of email addresses of individuals who have agreed to receive survey invitations. Ramo et al7 reported that a firm’s list was an effective way to target eligible participants. An advantage is the ability to obtain lists of participants who clearly meet the sample inclusion criteria and who have agreed to complete surveys sent by the firm. Because recipients have, in theory, agreed to receive email solicitations from the company, they should be more likely to open the email than individuals whose addresses come from other databases. Disadvantages include cost: the company is often paid a fee to distribute the link or for each completed survey, or individuals are paid for their responses, which can influence both the quality of the responses and the cost of the research.7 There is also the potential for subject burnout if individuals receive survey requests too frequently. In addition, surveys sponsored by academic and governmental agencies have higher response rates than those sponsored by commercial agencies.16

Electronic Surveys and Historically Black Colleges or Universities

Although college students are more likely to respond to electronic surveys than the general public, with younger, more highly educated, and technologically aware students having the best response rates,1 these findings are not consistent for all college students. Students who are African American or Hispanic are less likely to respond than those who are white or Asian American. Krebs et al24,25 conducted 2 large-scale web-based studies on sexual violence, one of which involved 4 Historically Black Colleges or Universities (HBCUs). Response rates at the HBCUs ranged from 15% to 32%, with an average of 25%.24 In contrast, in a similar study conducted at 2 large public majority universities, the response rate was 42%.25 Although neither study describes the methods used to recruit participants or who contacted potential participants, the results suggest that racial differences exist in electronic survey participation. It is important to examine these differences to determine best practices for recruiting diverse participants in electronic survey research.

Electronic surveys are an important methodology for collecting data on sensitive topics, such as violence victimization and perpetration. Particularly for young, white, educated, technologically aware students, electronic surveys may be the best methodology to ensure an adequate sample for analysis and representation. While evidence on best practices is growing, methodological questions remain. The literature lacks guidance on best practices for sending email communication to potential subjects about research participation. This research attempts to address that gap by describing response rates in 3 violence-focused studies in which the sender of the recruitment email varied.

Purpose

The purpose of this analysis is to describe the response rates from 3 violence-focused research studies when recruitment emails are sent from the researcher, a campus office, or a survey sampling firm. A secondary aim was to explore differences in electronic and paper survey administration at predominantly minority institutions.

METHODS

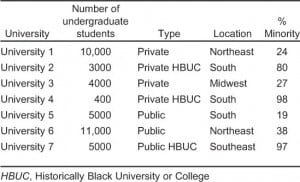

Study 1: Fall 2009. Study 1 used a survey to examine female college students’ attitudes and beliefs about reporting interpersonal violence. Data were collected at 5 universities (Table 1). University 1 is a large private university in the Northeast with just under 10,000 undergraduates, 24% of whom are minority students. University 2 is a private historically black university in the South with 3,000 undergraduate students, 80% of whom are Black/African American. University 3 is a medium-sized private college in the Midwest with an undergraduate enrollment of about 4,000 students, 27% of whom are students of color. Universities 4 and 5 are located in the South. University 4 is a small, private, secular historically black university, and University 5 is a public university with 5,000 undergraduate students, 19% of whom are minority students.

Initially, both recruitment and data collection were to be electronic at all 5 universities, and all potential participants were contacted by email. Participants at Universities 1 and 3 received emails from the researcher’s email address. At Universities 2, 4, and 5, a campus office sent the emails. Each participant received an email introducing the study, an email containing the link to the electronic survey, and 2 additional emails reminding them to complete the survey and thanking those who had participated.8 To increase participation, respondents who completed the survey could enter a lottery for gift cards. The survey was administered through Qualtrics, a secure web-based survey program. After limited success at the 2 HBCUs (Universities 2 and 4) and discussions with campus administrators, the recruitment strategy was adapted: trained research assistants approached potential participants in campus venues at Universities 2 and 4 and invited them to complete pencil and paper surveys.
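The four-contact sequence described above can be written down as a simple schedule. The Python sketch below is a hypothetical illustration only: the `Contact` class, the `schedule` helper, and the day offsets are assumptions, chosen merely to fit the 2-week window later reported for Study 1, and marking the survey link in 3 of the 4 emails follows the description of Study 3 rather than a documented Study 1 protocol.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Contact:
    """One recruitment email in a multi-contact sequence (hypothetical model)."""
    day_offset: int      # days after the first email; illustrative, not from the study
    purpose: str
    includes_link: bool  # whether the email carries the survey URL (assumed)


# Hypothetical four-contact sequence: introduction, survey link, and two
# reminder/thank-you emails. Offsets are assumptions that fit a 2-week window.
CONTACTS = [
    Contact(0, "introduce the study", False),
    Contact(2, "send the survey link", True),
    Contact(7, "reminder / thank you", True),
    Contact(13, "final reminder / thank you", True),
]


def schedule(start: date, contacts: list[Contact]) -> list[tuple[date, str]]:
    """Return (send date, purpose) pairs in chronological order."""
    ordered = sorted(contacts, key=lambda c: c.day_offset)
    return [(start + timedelta(days=c.day_offset), c.purpose) for c in ordered]


if __name__ == "__main__":
    # The start date is an arbitrary example, not the actual study date.
    for send_date, purpose in schedule(date(2009, 9, 14), CONTACTS):
        print(send_date.isoformat(), purpose)
```

Keeping the sequence as data makes it straightforward to hold the number and order of contacts fixed while varying only the sender, which is the comparison this analysis describes.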

Study 2: Fall 2010. Study 2 used a self-administered survey to examine perpetration and victimization among male and female college students. Data for Study 2 were collected at 3 universities (Table 1). Universities 1 and 6 are both located in the Northeast. University 1 is a private university with almost 10,000 undergraduate students (14,600 including graduate students), and University 6 is a public university with 11,000 undergraduate students and 3,000 graduate students, 38% of whom are minority students. University 7, located in the Southeast, is a public historically black university with 5,000 undergraduate students. As in Study 1, institutional constraints were a factor in Study 2: trained research assistants approached potential participants at University 7. The dean of students at University 1 provided the researchers with the email addresses of a representative random sample of students, and the researcher sent the emails to potential participants. At University 6, the Office of Student Affairs sent emails to a random sample of potential participants. The emails at both Universities 1 and 6 included a description of the study and a URL link to the electronic survey, where participants could complete and submit it. Following principles outlined by Dillman,8 participants were to receive an introductory email and reminders as described for Study 1.

Study 3: Fall 2012. Study 3 used an electronic survey to describe violent and coercive sexual behaviors among a national sample of college men and women. To obtain a national sample of college students, we selected a national marketing firm that targets youth for both marketing and research purposes. The firm reported a national database of over a million youth and could provide gender and racial/ethnic diversity in the sample. It was the same firm used in the Sexual Victimization of College Women study.20 The researchers paid the firm a fee to send email notification of the study (spam free) to 4,500 college students, and additional fees were paid to ensure adequate representation by gender, race/ethnicity, and age. As in our other studies, 4 separate emails were sent; the only difference in methods was that the emails came from the survey sampling firm. The first email notified participants about the upcoming survey, and the others were reminders to complete it. A link to the survey was included in 3 of the emails. No fees were charged per completed survey, and participants were not paid for their responses. For consistency, the same email subject line (Subject) was used in all three studies.

Measures

All three studies measured victimization, perpetration, or both, using reliable and valid instruments. Study 1 used measures based on the Theory of Planned Behavior,26,27 the Partner Abuse Scale,28,29 and the Abuse Assessment Screen.30 Study 1 also included items on stalking. Studies 2 and 3 used the victimization and perpetration versions of the Sexual Experiences Survey (SES)31,32 to measure sexual victimization and/or perpetration. Past victimization was measured with the Sexual and Physical Abuse History Questionnaire.33 As in other violence research, sexual victimization and perpetration items were placed at the end of a broader survey focused on general health and relationships. Participants were also asked about demographics, alcohol use, disordered eating, and history of victimization. For all 3 studies, the surveys were pretested with 10–15 diverse undergraduate students to check the flow of the survey and the time needed to complete it; completion time was approximately 30–40 minutes.

Human Subjects Protection

The institutional review boards at the participating universities approved each study. Given the topic of the studies, the researchers and the institutional review boards ensured that recruitment efforts were appropriate. The consent informed participants that they could decline to answer any question or stop the survey at any time, and the online survey had an “Exit Survey” button on each page. Each participant received information about the purpose, risks, and benefits of the study and about confidentiality. A list of national and local resources related to violence and trauma was provided to all participants. At the institutional review boards’ request, participants who exited the online survey before completing it were also given the list of resources. For Study 3 only, national resources were provided, and students were also encouraged to contact health or student services at their own college or university.

RESULTS

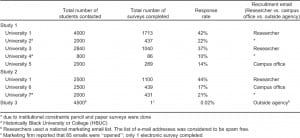

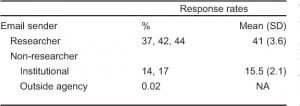

For Study 1, 11,640 students were contacted, resulting in 3,565 completed surveys (Tables 2 and 3). The overall response rate for Study 1 was 30.6%. At University 1, 4,000 students were sent a recruitment email from the researcher, and the response rate was 42%. Universities 2 and 4 (HBCUs) used both electronic and pen and paper methods and had response rates of 22% and 10%, respectively. Study 2 involved 3 universities, with 7,000 students contacted. Of these, 1,970 completed a survey (response rate 28%). At University 1, potential participants (n = 2,500) were contacted by the researcher, and 1,100 completed a survey (response rate 44%). In contrast, at University 6, potential participants (n = 2,500) were contacted through an email sent by a campus office, resulting in 439 completed surveys (17%). Finally, University 7, an HBCU, used only pencil and paper surveys, which had a response rate of 21%. For Study 3, a survey sampling firm was used for recruitment. The firm sent emails to 4,500 college-aged students inviting them to participate in a survey. Of these 4,500 students, 85 “opened” the email and 1 (< 0.1%) completed the survey.
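The response rates above are the number of completed surveys divided by the number of students contacted. The short Python sketch below reproduces that arithmetic for the counts reported in this section; the `response_rate` helper and the `REPORTED` table are hypothetical illustrations, not part of the study’s analysis. Values are printed to two decimals, so they may differ slightly from the rounded percentages given in the text.

```python
def response_rate(completed: int, contacted: int) -> float:
    """Percentage of contacted individuals who completed the survey."""
    if contacted <= 0:
        raise ValueError("contacted must be a positive count")
    return 100.0 * completed / contacted


# (completed, contacted) counts as reported in the Results text.
REPORTED = {
    "Study 1, all universities": (3565, 11640),
    "Study 2, all universities": (1970, 7000),
    "Study 2, University 1 (researcher email)": (1100, 2500),
    "Study 2, University 6 (campus-office email)": (439, 2500),
    "Study 3, survey sampling firm": (1, 4500),
}

if __name__ == "__main__":
    for label, (completed, contacted) in REPORTED.items():
        print(f"{label}: {response_rate(completed, contacted):.2f}%")
```

Run as a script, this prints 30.63%, 28.14%, 44.00%, 17.56%, and 0.02% for the five rows, in that order.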

Secondary Aim

In Study 1, electronic recruitment was attempted at Universities 2 and 4, both HBCUs. The initial emails at both universities were sent from the Dean of Students Office email address. At University 2, email recruitment yielded 79 surveys (4%), compared with 358 surveys (18%) from pen and paper recruitment. At University 4, email recruitment yielded 6 surveys (< 1%), compared with 80 surveys (10%) using pen and paper. Email recruitment consisted of 4 emails over a 2-week period. After discussions with Student Affairs at both campuses, the decision was made to collect pen and paper surveys. During recruitment, trained research assistants advised participants to complete only one survey.

In Study 2, during early meetings, personnel at University 7 advised the research team that electronic surveys had not been successful in recruiting participants for previous studies. Based on this advice and the team’s experience, the decision was made to collect data only with pen and paper surveys.

DISCUSSION

The findings of this analysis suggest that the sender of the recruitment email for electronic surveys (i.e., the survey invitation) may play a role in response rates. The sender could influence the recipient’s decision to open the email invitation, as well as the decision to respond by completing the survey. Our findings suggest that a researcher-initiated recruitment email is a more successful method of recruiting participants than a recruitment email sent by campus officials (Table 3). The higher rate for researcher-initiated recruitment emails is consistent with the limited research available in this area.19 In our analysis, recruitment emails sent by a campus office had lower response rates, which is also consistent with previous research.3 However, most studies using electronic surveys make no mention of the sender of the emails.

Several factors could account for the differences in response rates. Email is a fast-growing form of communication, and people receive large numbers of messages daily; they decide which to open based on the priority they assign to each email. College students often receive multiple emails from campus offices, such as the Dean of Students and academic divisions, on a wide range of topics that may not pertain to most students. As a result, students may become desensitized and may not open all emails from these individuals or offices. For students who do open these emails, a perceived threat to confidentiality could be a factor: the text of the message was from the researcher, but the return address was that of an administrative office. Having a campus official associated with the study could raise concern that findings might be shared, despite information to the contrary in the email describing the study.

On the other hand, an email from an unknown or unrecognized sender may pique the recipient’s curiosity enough to open it. An email sent from an individual on the same campus may also bring a sense of closeness and not feel like an email from an outsider. Further, the individual’s name may be recognizable to students and could prompt them to open the email and respond to the survey. Threats to confidentiality may also seem less salient when the email comes from, and study participation is with, a researcher on campus. For students at another university, an email from someone with a name@university.edu address may likewise pique curiosity, making them more likely to open the email and participate in the research. A university email address may also convey that the request is important.

Paper surveys at HBCUs had the next highest response rates. This is consistent with a meta-analysis concluding that paper surveys achieve higher response rates than email surveys.17 Paper surveys are typically distributed as correspondence from the researcher; for example, the researcher’s return address is on the envelope, or a member of the research team collects the survey responses in person. This leaves no confusion about who will receive the data.

Finally, the lowest response rate was seen with the survey sampling firm. Although only one attempt was made with the firm, the response was extremely poor: 1 completed survey from 4,500 invitations. This is not consistent with other research using sampling firms for recruitment. Ramo et al,7 in their study comparing 3 recruitment methods, reported that the survey sampling firm yielded the most recruits of the 3 methods (the others were internet advertisements and Craigslist). However, these researchers did not report the total number of potential participants contacted by the firm or a response rate. Furthermore, Ramo et al7 paid a fee for each completed survey, which adds to the cost. As in Study 3, the sender of the email invitation was a survey sampling firm that recruits and maintains a list of potential survey respondents. Because these individuals have agreed to be contacted by researchers or marketers, they would presumably open emails sent through the firm and complete the survey. This was not the case in our research, in which few recipients opened the email invitation and even fewer completed the survey. One possible explanation is that individuals become burned out by frequent emails from the survey sampling firm. It is also possible that these mass emails were caught in recipients’ spam filters, although the firm used in Study 3 ensured spam-free email addresses. Because only one attempt was made, further research is needed on this method of sample recruitment.

The findings also suggest that pen and paper surveys are a more effective way than electronic surveys to conduct research at HBCUs. Our findings were similar to those of Krebs et al,24,25 in which more robust response rates were associated with pen and paper survey administration than with electronic surveys. A systematic review of factors affecting response rates to web-based surveys found that African Americans were less likely to participate.1 Campus administrators at the 3 HBCUs in this study reported that low turnout was due to campus internet servers that were not robust and were prone to disruptions. Further, although many college-aged individuals have internet access on their phones or other devices, these devices may not remove this barrier: completing a survey on them can be more difficult because of small screen sizes and differences in browser capabilities compared with computers. More broadly, African Americans have lower participation rates in health research than other racial/ethnic groups, and greater effort must be made to identify options that improve minority participation in research.34 More research is needed to elucidate factors that enable higher response rates among African American participants.

LIMITATIONS

The findings present a preliminary description of differences in response rates to electronic surveys based on who contacts potential subjects for study recruitment. The findings are limited by the use of 3 studies at 7 universities conducted by one research team. An examination of the methods and response rates shows higher rates with researcher-sent emails and lower rates with institution-sent emails; however, with only 7 observations, we did not have adequate power to determine the statistical significance of these differences. The survey sampling firm had the lowest rate, but with only one observation we are unable to draw conclusions. The findings do suggest that response rates differed depending on how individuals were contacted; however, more research is needed to fully understand the relationship between the sender and response rates to electronic surveys. One consistent factor was that the same subject line was used in all 3 studies. Campus factors could also have influenced the response rates. For example, all of the public campuses we used wanted to send the emails to participants themselves, as did the 2 private HBCUs; one public university was in the Northeast and the other in the South. Future research could explore institution-specific variations in response rates to electronic surveys. However, given the limited research on this important topic, these findings offer some insight into ways to improve response rates to electronic surveys and a rationale for considering paper and pencil surveys in some cases. Research with students could also uncover factors that prompt them to open emails about research and to participate.

CONCLUSION

In conclusion, this analysis represents a preliminary step toward understanding the importance of the email sender in electronic survey response rates. Our analysis found that recruitment emails sent by researchers had better response rates than recruitment emails sent by campus officials. Future research is needed to understand the influence of the sender of recruitment emails in electronic surveys. College students are at highest risk for interpersonal violence, and the need for quality data is critical. For scientists focused on violence research in this population, identifying best practices for survey methodology will promote accurate disclosure, increase response rates, and ensure data quality.

Footnotes

Supervising Section Editor: Monica H. Swahn, PhD, MPH

Submission history: Submitted December 14, 2012; Revision received February 15, 2013; Accepted February 26, 2013

Full text available through open access at http://escholarship.org/uc/uciem_westjem

DOI: 10.5811/westjem.2013.2.15676

Address for Correspondence: Melissa A. Sutherland, PhD, RN. Boston College, Connell School of Nursing, Cushing Hall, 140 Commonwealth Avenue, Chestnut Hill, MA 02467. Email: melissa.sutherland@bc.edu.

Conflicts of Interest: By the WestJEM article submission agreement, all authors are required to disclose all affiliations, funding sources, and financial or management relationships that could be perceived as potential sources of bias. The authors disclosed none.

REFERENCES

1. Fan W, Yan Z. Factors affecting response rates of the web survey: A systematic review. Computers in Human Behavior. 2010;26:132–139.

2. Couper M, Conrad F, Tourangeau R. Visual context effects in web surveys. Public Opinion Quarterly. 2007;71:623–634.

3. Reyns BW, Henson B, Fisher BS. Stalking in the twilight zone: Extent of cyberstalking victimization and offending among college students. Deviant Behav. 2012;33:1–25.

4. Amar AF, Alexy EM. Coping with stalking. Issues in Mental Health Nursing. 2010;31:8–14.

5. Harned MS. Abused women or abused men? An examination of the context and outcomes of dating violence. Violence Vict. 2001;16:269–285.

6. DuRant R, Champion H, Wolfson M, et al. Date fighting experiences among college students: Are they associated with other health-risk behaviors? J Am Coll Health. 2007;55:291–296.

7. Ramo DE, Hall SM, Prochaska JJ. Reaching young adult smokers through the Internet: Comparison of three recruitment mechanisms. Nicotine Tob Res. 2010;12:768–775.

8. Dillman DA, Smyth JD, Christian LM. Internet, mail, and mixed-mode surveys: the tailored design method. Hoboken, NJ: Wiley; 2009.

9. Dillman D, editor. Mail and internet surveys: The tailored design method. Hoboken, NJ: Wiley; 2007.

10. Reddy MK, Fleming MT, Howells NL, et al. Effects of method on participants and disclosure rates in research on sensitive topics. Violence Vict. 2006;21(4):499–506.

11. Knapp H, Kirk SA. Using pencil and paper, Internet and touch-tone phones for self-administered surveys: Does methodology matter? Computers in Human Behavior. 2003;19:117–134.

12. Lefever S, Dal M, Matthiasdottir A. Online data collection in academic research: Advantages and limitations. British Journal of Educational Technology. 2007;38:574–582.

13. Tourangeau R. Survey research and societal change. Annu Rev Psychol. 2004;55:775–801.

14. Couper MP. Web surveys: A review of issues and approaches. Public Opinion Quarterly. 2000;64:464–494.

15. Fricker RD, Schonlau M. Advantages and Disadvantages of Internet Research Surveys: Evidence from the Literature. Field Methods. 2002;14:347–367.

16. Manfreda KL, Bosnjak M, Berzelak J, et al. Web surveys versus other survey modes: A meta-analysis comparing response rates. International Journal of Market Research. 2008;50:79–104.

17. Shih TH, Fan X. Comparing response rates in e-mail and paper surveys: A meta-analysis. Educational Research Review. 2009;4:26–40.

18. Benfield JA, Szlemko WJ. Internet-based data collection: Promises and realities. Journal of Research Practice. 2006;2:D1.

19. Buhi ER, Clayton H, Surrency HH. Stalking victimization among college women and subsequent help-seeking behaviors. J Am Coll Health. 2009;57:419–426.

20. Fisher BS, Cullen FT, Turner MG. The sexual victimization of college women. Washington, D.C.: National Institute of Justice; 2000.

21. Fisher BS, Cullen FT, Turner MG. Reporting sexual victimization to the police and others: results from a national-level study of college women. Criminal Justice Behav. 2003;30:6–38.

22. Black MC, Basile KC, Breiding MJ. The National Intimate Partner and Sexual Violence Survey (NISVS): 2010 Summary Report. Atlanta, GA: National Center for Injury Prevention and Control, Centers for Disease Control and Prevention; 2011.

23. Tjaden P, Thoennes N. Full report of the prevalence, incidence, and consequences of violence against women. Washington: US Department of Justice; 2000. NCJ 183781.

24. Krebs CP, Lindquist CH, Barrick KB. The historically Black College and University Campus sexual assault (HBCU-CSA) study. Department of Justice (US); 2010.

25. Krebs CP, Lindquist CH, Warner TD. The campus sexual assault (CSA) study: Final Report. 2007.

26. Ajzen I. Attitudes, personality and behavior. 2nd ed. New York: Open University Press; 2005.

27. Fishbein M, Ajzen I. Belief, attitude, intention and behavior: An introduction to theory and research. Reading, MA: Addison-Wesley; 1975.

28. Attala JM, Hudson WW, McSweeney M. A partial validation of two short-form Partner Abuse Scales. Women Health. 1994;21(2–3):125–139.

29. Hudson WW, MacNeil G, Dierks J. Six new assessment scales: a partial validation. Tempe, AZ: Walmyr Publishing Company; 1995.

30. Soeken K, McFarlane J, Parker B. The Abuse Assessment Screen: A clinical instrument to measure frequency, severity, and perpetrator of abuse against women. In: Campbell J, editor. Empowering Survivors of Abuse: Health Care for Battered Women and Their Children. 1998.

31. Koss MP, Abbey A, Campbell R. The sexual experiences short form victimization (SES-SFV). Tucson, AZ: University of Arizona; 2006.

32. Koss MP, Abbey A, Campbell R. The sexual experiences short form perpetration (SES-SFP). Tucson, AZ: University of Arizona; 2006.

33. Leserman J, Drossman DA, Li Z. The reliability and validity of a sexual and physical abuse history questionnaire in female patients with gastrointestinal disorders. Behav Med. 1995;21:141–150.

34. UyBico SJ, Pavel S, Gross CP. Recruiting vulnerable populations into research: A systematic review of recruitment interventions. J Gen Intern Med. 2007;22:852–863.