| Author | Affiliation |
|---|---|
| Nicholas Nacca, MD | SUNY Upstate Medical University, Department of Emergency Medicine, Syracuse, New York |
| Jordan Holliday | SUNY Upstate Medical University, Department of Emergency Medicine, Syracuse, New York |
| Paul Y. Ko, MD | SUNY Upstate Medical University, Department of Emergency Medicine, Syracuse, New York |

ABSTRACT

Introduction

Mounting evidence suggests that high-fidelity mannequin-based simulation (HFMBS) and computer-based simulation are useful adjunctive educational tools for advanced cardiac life support (ACLS) instruction. We sought to determine whether access to a supplemental, online computer-based ACLS simulator would improve students’ performance on a standardized Mega Code assessment using HFMBS.

Methods

Sixty-five third-year medical students were randomized. Intervention group subjects (n = 29) each received a two-week access code to the online ACLS simulator, whereas the control group subjects (n = 36) did not. Primary outcome measures included students’ time to initiate chest compressions, defibrillate ventricular fibrillation, and pace symptomatic bradycardia. Secondary outcome measures included students’ subjective self-assessment of ACLS knowledge and confidence.

Results

Students with access to the online simulator defibrillated ventricular fibrillation in an average of 112 seconds, compared with 149.9 seconds for those without access, an average of 38 seconds faster [p<.05]. Similarly, students with access to the simulator paced symptomatic bradycardia in an average of 95.14 seconds, compared with 154.9 seconds for those without access, a difference of 59.81 seconds [p<.05]. On a subjective 5-point scale, there was no significant difference in self-assessed ACLS knowledge between the control (mean 3.3) and intervention (mean 3.1) groups [p=.21]. Despite outperforming the control group in the standardized Mega Code scenario, the intervention group reported lower confidence on a 5-point scale (mean 2.5) than the control group (mean 3.2) [p<.05].

Conclusion

The reduction in time to defibrillate ventricular fibrillation and to pace symptomatic bradycardia among the intervention group subjects suggests that the online computer-based ACLS simulator is an effective adjunctive ACLS instructional tool.

INTRODUCTION

Simulation has advanced rapidly over the past decade, with increasingly innovative and realistic tools incorporated into the daily education of healthcare providers.1-4 The body of literature investigating the effectiveness of medical simulation has struggled to keep pace with these developments in simulation technology. Many authors have called for high-quality research on the use of simulation in medical education.5-8

Advanced cardiac life support (ACLS) is a collection of skills and interventions intended to direct healthcare providers during treatment of cardiac arrest and other life-threatening emergencies. Currently, many medical schools do not require ACLS certification for graduation.9,10 Many medical school graduates feel underprepared and lack the confidence and skills to effectively participate in resuscitative efforts as interns beginning their residency programs.10-14

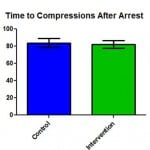

Figure 1. Students with access to computer-based simulation did not initiate compressions in the ACLS Mega Code faster than students without access.

ACLS, advanced cardiac life support

High-quality ACLS education at the medical student level is therefore essential. The integration of high-fidelity mannequin-based simulation (HFMBS) into existing ACLS curricula as a supplemental instructional tool has been advocated.10,15-21 A recent study of medical students using HFMBS in ACLS training showed significantly improved knowledge and psychomotor skills.22 However, the costs of acquiring and maintaining an HFMB simulator may be impeding its use in many ACLS training programs.3,10,23

The department of emergency medicine at the State University of New York (SUNY) Upstate Medical University uses two different ACLS curricula to train approximately 650 healthcare providers annually. The first is a traditional two-day American Heart Association (AHA) ACLS course, which uses didactic lectures, AHA-approved instructional videos, and low-fidelity simulation in small-group sessions.10 The second is a three-day HFMBS course used to teach ACLS to third-year medical students during their two-week internal medicine rotation. Third-year medical students enrolled in the HFMBS course demonstrated higher proficiency in ACLS than students enrolled in the traditional course.10 This finding is consistent with previous studies showing improved educational outcomes, retention of ACLS skills and knowledge, and adherence to ACLS guidelines with simulation-based training.24-26

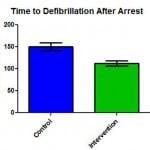

Figure 2. Students with access to computer-based simulation defibrillated ventricular fibrillation in the ACLS Mega Code 38 seconds faster than students without access [t(63)=3.36, p<.05].

ACLS, advanced cardiac life support

Much of the early literature on HFMBS as an educational tool for training healthcare providers came from residency programs, and there were few data on the use of simulation at the medical student level.27 Only recently have educators studied the utility of HFMBS in undergraduate medical education.10,27-29 In seeking to further increase student proficiency in ACLS, we identified reports of ACLS-specific computer-based simulators dating back to 1991.30,31 Advances in computer technology have since facilitated the development of many high-quality computer-based simulation programs that are effective instructional tools.13,23,32 A more recent study suggested that a computer-based simulation program used as a standalone teaching tool improved retention of ACLS guidelines.33 We hypothesized that a computer-based simulation program used as an adjunct to HFMBS ACLS training would improve ACLS proficiency.

METHODS

At SUNY Upstate, each third-year emergency medicine resident serves as a certified AHA ACLS instructor during their administrative month, training groups of 4-6 third-year medical students in ACLS using HFMBS. During their two-week rotation, the medical students undergo three days of HFMBS ACLS training: an introduction to ACLS material using partial task trainers, followed by simulated management of five ACLS scenarios (ventricular fibrillation, ventricular tachycardia, symptomatic bradycardia, pulseless electrical activity, and asystole). The details of this curriculum have been previously published.10

At the conclusion of the course, students were assessed by running a “Mega Code” on the high-fidelity mannequin simulator (SimMan®, Laerdal Medical Corporation, Wappingers Falls, NY, USA). The “Mega Code” scenario involved a 40-year-old man complaining of chest pain who becomes unresponsive after 40 seconds of conversation. The student must first recognize and treat ventricular fibrillation with the appropriate electrical and pharmacologic interventions while directing team members in proper cardiopulmonary resuscitation. After the third defibrillation attempt, the patient converts to symptomatic sinus bradycardia, which the student must recognize and treat appropriately. Failure to initiate and carry out the proper interventions resulted in simulated patient death.

A novel, online, computer-based ACLS simulator (SimCode ACLS™, Transcension Healthcare LLC) teaches the user to direct nurses and to diagnose and appropriately treat ACLS cases. Student accounts can be monitored through a manager account, allowing student use and performance to be assessed and trended over time. Transcension Healthcare LLC provided student accounts for the participants in this study.

Groups of third-year medical students were randomized to intervention or control. Both groups underwent a two-week ACLS course using HFMBS, taught by a third-year emergency medicine resident. Each student in the intervention group (n=29) received a two-week access code to the online ACLS simulator as a supplemental resource, whereas students in the control group (n=36) did not. A runs test was performed to confirm randomization. Students in the intervention group were instructed to complete the computer-based tutorial on the first day of their ACLS instruction and were then asked to complete the online ventricular tachycardia and symptomatic bradycardia cases during their two-week ACLS course. We used the manager account feature to verify, qualitatively, that students in the intervention group had used the computer-based ACLS simulator. On the last day of the ACLS course, informed consent was obtained from both groups before any data collection.
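
The runs test checks whether a sequence of two labels looks random rather than clustered or strictly alternating. The paper does not state which sequence was tested or which software was used, so the following is only an illustrative sketch: it assumes the input is the order in which groups were assigned to intervention ("I") or control ("C"), and it uses the common large-sample normal approximation of the Wald-Wolfowitz runs test. The function name `runs_test` and the example sequence are hypothetical.

```python
import math


def runs_test(sequence):
    """Wald-Wolfowitz runs test (normal approximation) for a two-label sequence.

    Hypothetical helper for illustration. Returns
    (observed_runs, expected_runs, z_statistic).
    """
    labels = sorted(set(sequence))
    if len(labels) != 2:
        raise ValueError("sequence must contain exactly two distinct labels")
    n1 = sum(1 for s in sequence if s == labels[0])
    n2 = len(sequence) - n1
    n = n1 + n2
    # A run is a maximal block of identical consecutive labels, so the
    # number of runs is one more than the number of label changes.
    runs = 1 + sum(1 for a, b in zip(sequence, sequence[1:]) if a != b)
    # Mean and variance of the run count under the null hypothesis of randomness.
    expected = 2 * n1 * n2 / n + 1
    variance = (2 * n1 * n2 * (2 * n1 * n2 - n)) / (n ** 2 * (n - 1))
    z = (runs - expected) / math.sqrt(variance)
    return runs, expected, z


# Hypothetical assignment order: I = intervention, C = control.
observed, expected, z = runs_test(["I", "C", "C", "I", "I", "C", "I", "C"])
```

Here the example sequence has 6 runs against an expected 5, giving z of about 0.76. A |z| below about 1.96 offers no evidence against randomness at the two-sided 0.05 level; with only a handful of groups the normal approximation is rough, and an exact runs table would be preferable.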

Each student’s simulated “Mega Code” was recorded using audio, video, and Laerdal recording software. Before the assessment, students were asked to rate their subjective knowledge of, and confidence in, ACLS material and practice. After the “Mega Code” assessment, students again rated their subjective knowledge and confidence. Students in the intervention group were also asked additional questions about their use of the computer-based ACLS simulation program.

From review of the video and software recordings, we calculated for each student the time to initiate chest compressions during cardiopulmonary resuscitation and the time to defibrillation, both measured from the beginning of the simulation. The time to pace symptomatic bradycardia was measured from the onset of the rhythm change. These times served as our primary outcome measures. The post-Mega Code subjective knowledge and confidence surveys served as secondary outcome measures. We entered the collected data into a spreadsheet and used a statistical software package (GraphPad Prism Version 4.0®, GraphPad Software, Inc., San Diego, CA, USA) to perform a Student’s t-test for each variable. The SUNY Upstate Medical University Institutional Review Board granted exemption status for this study.
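
The timing rules above (compressions and defibrillation timed from the start of the simulation, pacing timed from the onset of bradycardia) can be expressed as a small function over a timestamped event log. This is a sketch only: the study extracted times by reviewing video and Laerdal software recordings, and the `Event` record and the event names used here (`compressions_start`, `shock`, `bradycardia_onset`, `pacing_start`) are hypothetical labels, not fields of any real recording format.

```python
from dataclasses import dataclass


@dataclass
class Event:
    t: float    # seconds since the scenario clock started (t = 0)
    name: str   # hypothetical event label, e.g. "shock"


def outcome_times(events):
    """Compute the three primary timing outcomes for one recorded Mega Code.

    Compressions and defibrillation are timed from the start of the
    simulation; pacing is timed from the onset of bradycardia. Any
    action the student never performed is reported as None.
    """
    first = {}
    for e in sorted(events, key=lambda ev: ev.t):
        first.setdefault(e.name, e.t)  # keep only the first occurrence

    def since(event, origin):
        # Interval between two logged events, or None if either is missing.
        if event not in first or origin not in first:
            return None
        return first[event] - first[origin]

    return {
        "time_to_compressions": first.get("compressions_start"),
        "time_to_defibrillation": first.get("shock"),
        "time_to_pacing": since("pacing_start", "bradycardia_onset"),
    }
```

For instance, a hypothetical log with compressions at 55 s, shocks at 130 s and 200 s, bradycardia onset at 300 s, and pacing at 390 s yields 55, 130, and 90 seconds respectively; only the first shock counts toward time to defibrillation.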

RESULTS

We obtained complete data from the recorded “Mega Code” assessments and surveys of 65 third-year medical students. During the study period, informed consent was not obtained from four groups (three assigned to intervention and one to control), so no data were collected from them; this accounted for approximately 24 missed participants. An additional two groups (one assigned to control and one to intervention) consented, but video recordings were unavailable, so seven consented students were excluded from analysis for lack of data. Only one student in the intervention group declined participation. Ten students assigned to the intervention group never accessed the online simulator and were therefore excluded from analysis.

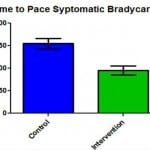

Figure 3. Students with access to computer-based simulation paced symptomatic bradycardia in the ACLS Mega Code 60 seconds faster than students without access [t(63)=3.71, p<.05].

ACLS, advanced cardiac life support.

Of the 65 participants with complete data (36 control and 29 intervention), there was no statistically significant difference between the control and intervention groups in the time required to initiate chest compressions on the unresponsive, pulseless high-fidelity mannequin (Figure 1). There was a statistically significant difference between the two groups in time to defibrillate ventricular fibrillation: students who had used the computer-based simulator defibrillated in an average of 112 seconds, versus 149.9 seconds for those without access, an average of 38 seconds faster [t(63)=3.36, p<.05] (Figure 2). Additionally, students in the intervention group paced symptomatic bradycardia in an average of 95.14 seconds, versus 154.9 seconds in the control group. The intervention group was thus on average 60 seconds faster than the control group, a statistically significant difference [t(63)=3.71, p<.05] (Figure 3).

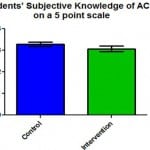

Figure 4. Students with access to computer-based simulation did not rate their knowledge of ACLS guidelines differently than those without access.

ACLS, advanced cardiac life support

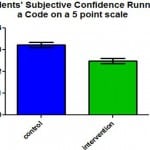

Figure 5. Students with access to computer-based simulation reported significantly lower subjective confidence in running a code than those without access [t(63)=4.19, p<.05].

There was no statistically significant difference in students’ perceived ACLS knowledge after the Mega Code assessment between the control and intervention groups (Figure 4). However, students’ subjective confidence after the Mega Code assessment differed significantly [t(63)=4.19, p<.05], with students in the intervention group reporting lower confidence on a 5-point scale (Figure 5). Students in the intervention group gave many positive subjective responses indicating satisfaction with the computer-based simulation program. Sixty-six percent reported that the program “Very Much Improved” or “Extremely Improved” their ability to effectively manage an ACLS code. Additionally, 34% reported that they would “Definitely” and 62% that they would “Probably” use the online, computer-based simulator in the future.

DISCUSSION

These data show that adding an online computer-based simulation program to an HFMB ACLS training program did not significantly change the time to initiate chest compressions in a simulated scenario requiring cardiopulmonary resuscitation. It did, however, reduce the time to defibrillate ventricular fibrillation and to pace symptomatic bradycardia. Although these primary outcome measures have not been systematically validated as indicators of improved ACLS performance, the authors interpret them as valuable indicators of comfort and fluency with ACLS protocols.

Perhaps the most interesting finding was that, despite outperforming students in the control group, students in the intervention group reported feeling less confident. Additionally, students in the intervention group did not rate their knowledge higher than did students in the control group. These findings were unexpected and contrast starkly with much of the available literature on participants’ self-confidence after an educational intervention.10,24,34 One possible explanation is that the online, computer-based simulation program exposed students in the intervention group to many ACLS cases beyond the five HFMB simulation scenarios in which both groups participated. Because of the program’s structure, students in the intervention group could not have completely ignored these additional cases, even though they were tasked only with completing the ventricular fibrillation and symptomatic bradycardia cases. Exposure to the additional cases may have increased the perceived complexity of the material and contributed to lower subjective confidence despite superior performance on the primary outcome measures.

Managing resuscitations and other medical crises, whether simulated or real, elicits emotional stress and anxiety, which may compromise individual and team performance.35,36 The stress and anxiety encountered in such situations may also enhance memory encoding, consolidation, and retrieval, thereby improving retention.19,37,38 Reports on the effects of the stress and anxiety induced during simulation conflict: a group of anesthesia trainees reported enhanced learning and clinical experience, whereas a group of nursing students reported hindered learning.19,39,40 In the psychological literature, anxiety is characterized as demotivating and detrimental to perceived self-efficacy.41,42 We did not include a real-time measure of student anxiety in this study; it is possible that students in the intervention group experienced more anxiety while learning ACLS than did students in the control group.

The improvement in performance demonstrated by students in the intervention group during the HFMB “Mega Code” assessment is consistent with previous studies supporting the use of computer-based simulation as an educational tool.13,23,32,33

LIMITATIONS

Our study has several clear limitations. The most notable was that the third-year emergency medicine resident who taught the HFMB ACLS course was not consistent: at least eleven different instructors trained students whose Mega Code performances were included in this analysis, and the authors were unable to control for this confounding variable. In addition, instructors could not be blinded to which students were in the intervention group. We did not perform a power analysis because of the difficulty of predicting the expected improvement in performance from the educational intervention. Incomplete data collection, resulting from a combination of computer and human error, led to the exclusion of several cohorts of third-year medical students, comprising both intervention and control groups, from data analysis. The authors were unable to monitor the self-study time of students in the control group and thus could not determine whether students in the intervention group spent more time with ACLS material overall. The authors also did not record the duration or frequency of program use. Use of the online, computer-based simulation program could not be enforced, so students had to be self-motivated to use it. Given the nature of this curriculum analysis study, the authors could not enforce use of the program through course failure or grade demotion, although such enforcement would be feasible in practical application. Several students in the intervention group neglected to use the program and were excluded from the subsequent analysis. Intention-to-treat analysis was deliberately not used as the primary analysis, on the premise that a course instructor using the computer-based simulator would be able to monitor and enforce its use. A post hoc intention-to-treat analysis yielded results that remained statistically significant. Students recorded the number of ACLS codes they had previously run on the post-Mega Code data collection form; only one student, in the control group, had prior experience as a seasoned paramedic and was therefore excluded from analysis. The authors did not collect data on ultimate career goals. This is a potential confounder, because students planning to enter acute care specialties may not have been balanced between the intervention and control groups. Finally, it is unclear whether these findings generalize to other online, computer-based simulation programs.
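
The limitations above distinguish the primary analysis, which excluded intervention students who never opened the simulator, from the post hoc intention-to-treat (ITT) analysis, which keeps every student in the group to which they were randomized. The sketch below shows how the two analysis sets differ. The `Student` record and its fields are hypothetical; both sets here also require complete Mega Code data, which strictly makes the ITT set a "modified" ITT, and other exclusions (such as the student with paramedic experience) are not modeled.

```python
from dataclasses import dataclass


@dataclass
class Student:
    assigned: str            # randomized group: "intervention" or "control"
    used_simulator: bool     # hypothetical usage flag (e.g. from a manager account)
    has_complete_data: bool  # recorded Mega Code and survey available


def analysis_sets(students):
    """Build intention-to-treat and per-protocol groups (illustrative sketch).

    Both sets drop students without complete outcome data. ITT keeps every
    remaining student in the randomized group; per-protocol additionally
    drops intervention students who never used the simulator.
    """
    itt = {"intervention": [], "control": []}
    per_protocol = {"intervention": [], "control": []}
    for s in students:
        if not s.has_complete_data:
            continue
        itt[s.assigned].append(s)
        if s.assigned == "control" or s.used_simulator:
            per_protocol[s.assigned].append(s)
    return itt, per_protocol
```

The design choice mirrors the trade-off described above: per-protocol estimates the effect of actually using the program, while ITT estimates the effect of offering it, which is the more conservative comparison when use cannot be enforced.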

CONCLUSION

We interpret these data as supporting the online, computer-based simulator as a valuable adjunctive ACLS teaching tool. Students with access to the simulation program outperformed students without access in both time to defibrillate ventricular fibrillation and time to pace symptomatic bradycardia. Notably, students in the intervention group felt less confident than did students in the control group. We speculate that they may have felt less confident managing resuscitations because of the broader perspective on ACLS material they gained from the many ACLS scenarios in the computer-based simulation program. Confirming this explanation will require further investigation.

Footnotes

Supervising Section Editor: Eric Snoey, MD

Full text available through open access at http://escholarship.org/uc/uciem_westjem

Address for Correspondence: Paul Y. Ko, MD. Department of Emergency Medicine, SUNY Upstate Medical University, EmSTAT Building, 550 East Genesee Street # 103C, Syracuse, NY 13202 Email: kop@upstate.edu.

Submission history: Submitted October 30, 2013; Revision received August 11, 2014; Accepted September 8, 2014

Conflicts of Interest: By the WestJEM article submission agreement, all authors are required to disclose all affiliations, funding sources and financial or management relationships that could be perceived as potential sources of bias. The authors disclosed none.

REFERENCES

- Bradley P. The history of simulation in medical education and possible future directions. Med Educ. 2006;40:254-62. [PubMed]

- Gaba DM. The future vision of simulation in health care. Qual Saf Health Care. 2004;13(Suppl 1):i2-10. [PubMed]

- Cooper JB, Taqueti VR. A brief history of the development of mannequin simulators for clinical education and training. Qual Saf Health Care. 2004;13(Suppl 1):i11-8. [PubMed]

- Grenvik A, Schaefer J. From Resusci-Anne to Sim-Man: the evolution of simulators in medicine. Crit Care Med. 2004;32(Suppl):S56-7. [PubMed]

- McGaghie WC, Issenberg SB, Petrusa ER, et al. A critical review of simulation-based medical education research: 2003-2009. Med Educ. 2010;44:50-63. [PubMed]

- McGaghie WC. Research opportunities in simulation-based medical education using deliberate practice. Acad Emerg Med. 2008;15:995-1001. [PubMed]

- Bond WF, Lammers RL, Spillane LL, et al. The use of simulation in emergency medicine: a research agenda. Acad Emerg Med. 2007;14:353-64. [PubMed]

- McGaghie WC, Issenberg SB, Petrusa ER, et al. Effect of practice on standardised learning outcomes in simulation-based medical education. Med Educ. 2006;40:792-7. [PubMed]

- Langdale LA, Schaad D, Wipf J, et al. Preparing graduates for the first year of residency: are medical schools meeting the need? Acad Med. 2003;78:39-44. [PubMed]

- Ko PY, Scott JM, Mihai A, et al. Comparison of a modified longitudinal simulation-based advanced cardiovascular life support to a traditional advanced cardiovascular life support curriculum in third-year medical students. Teach Learn Med. 2011;23:324-30. [PubMed]

- Jensen ML, Hesselfeldt R, Rasmussen MB, et al. Newly graduated doctors’ competence in managing cardiopulmonary arrests assessed using a standardized Advanced Life Support (ALS) assessment. Resuscitation. 2008;77:63-8. [PubMed]

- Seedat A, Walmsley H, Rochester S. Advanced life support (ALS) and medical students: do we feel confident and competent after completing our training? Resuscitation. 2008;78:100-1. [PubMed]

- O’Leary FM, Janson P. Can e-learning improve medical students’ knowledge and competence in paediatric cardiopulmonary resuscitation? A prospective before and after study. Emerg Med Australas. 2010;22:324-9. [PubMed]

- O’Brien G, Haughton A, Flanagan B. Interns’ perceptions of performance and confidence in participating in and managing simulated and real cardiac arrest situations. Med Teach. 2001;23:389-395. [PubMed]

- Franklin GA. Simulation in life support protocols. Practical Health Care Simulations, 1st edition. 2004;393-404.

- Kapur PA, Steadman RH. Patient simulator competency testing: Ready for takeoff? Anesth Analg. 1998;86:1157-9. [PubMed]

- Schumacher L. Simulation in nursing education: Nursing in a learning resource center. Practical Health Care Simulations, 1st edition. 2004;169-75.

- Rodgers DL, Securro S Jr, Pauley RD. The effect of high-fidelity simulation on educational outcomes in an advanced cardiovascular life support course. Simul Healthc. 2009;4:200-6. [PubMed]

- DeMaria S Jr, Bryson EO, Mooney TJ, et al. Adding emotional stressors to training in simulated cardiopulmonary arrest enhances participant performance. Med Educ. 2010;44:1006-15. [PubMed]

- Hwang JCF, Bencken B. Integrating simulation with existing clinical education programs: dream and develop while keeping the focus on your vision. Clinical Simulation: Operations, Engineering, and Management. 1st edition. Burlington, Academic Press. 2008;95-105.

- Ferguson S, Beeman L, Eichorn M, et al. Simulation in nursing education: high-fidelity simulation across clinical settings and educational levels. Practical Health Care Simulations, 1st edition. 2004;184-204.

- Langdorf MI, Strom SL, Yang L, et al. High-Fidelity Simulation Enhances ACLS Training. Teach Learn Med. 2014;26(3):266-73. [PubMed]

- Bonnetain E, Boucheix JM, Hamet M, et al. Benefits of computer screen-based simulation in learning cardiac arrest procedures. Med Educ. 2010;44:716-22. [PubMed]

- Wayne DB, Butter J, Siddall VJ, et al. Mastery learning of Advanced Cardiac Life Support skills by internal medicine residents using simulation technology and deliberate practice. J Gen Intern Med. 2006;21:251-6. [PubMed]

- Wayne DB, Butter J, Siddall VJ, et al. Simulation-based training of internal medicine residents in Advanced Cardiac Life Support protocols: a randomized trial. Teach Learn Med. 2005;17:202-8. [PubMed]

- Wayne DB, Didwania A, Feinglass J, et al. Simulation-based education improves quality of care during cardiac arrest team responses at an academic teaching hospital: a case-control study. Chest. 2008;133:56-61. [PubMed]

- Ander DS, Heilpern K, Goertz F, et al. Effectiveness of a simulation-based medical student course on managing life-threatening medical conditions. Simul Healthc. 2009;4:207-11. [PubMed]

- Murray D, Boulet J, Ziv A, et al. An acute care skills evaluation for graduating medical students: a pilot study using clinical simulation. Med Educ. 2002;36:833-41. [PubMed]

- Rogers PL, Jacob H, Thomas EA, et al. Medical students can learn the basic application, analytic, evaluative, and psychomotor skills of critical care medicine. Crit Care Med. 2000;28:550-4. [PubMed]

- Wormuth DW. An innovative method of teaching advanced cardiac-life support, or why Cosmic Osmo may someday save your life. Proc Annu Symp Comput Appl Med Care. 1991;864-5. [PubMed]

- Tanner TB, Gitlow S. A computer simulation of cardiac emergencies. Proc Annu Symp Comput Appl Med Care. 1991;864-5. [PubMed]

- Christenson J, Parrish K, Barabé S, et al. A comparison of multimedia and standard Advanced Cardiac Life Support learning. Acad Emerg Med. 1998;5:702-8. [PubMed]

- Schwid HA, Rooke GA, Ross BK, et al. Use of a computerized advanced cardiac life support simulator improves retention of advanced guidelines better than a textbook review. Crit Care Med. 1999;27:821-4. [PubMed]

- Hoadley TA. Learning Advanced Cardiac Life Support: a comparison study of the effects of low- and high-fidelity simulation. Nurs Educ Perspect. 2009;30:91-5. [PubMed]

- Johnston JH, Driskell JE, Salas E. Vigilant and hypervigilant decision making. J Appl Psychol. 1997;82:614-22. [PubMed]

- Driskell JE, Salas E, Johnston J. Does stress lead to a loss of team perspective? Group Dyn. 1999;3:291-302.

- Cahill L, Haier RJ, Fallon J, et al. Amygdala activity at encoding correlated with long-term, free recall of emotional information. Proc Natl Acad Sci U S A. 1996;93:8016-21. [PubMed]

- Sandi C, Pinelo-Nava MT. Stress and memory: behavioral effects and neurobiological mechanisms. Neural Plast. 2007:79870. [PubMed]

- Price JW, Price JR, Pratt DD, et al. High-fidelity simulation in anesthesiology training: a survey of Canadian anesthesiology residents’ simulator experience. Can J Anesth. 2010;57:134-42. [PubMed]

- Elfrink VL, Nininger J, Rohig L, Lee J. The case for group planning in human patient simulation. Nurs Educ Perspect. 2009;30:83-6. [PubMed]

- Chen G, Gully SM, Whiteman J, et al. Examination of relationships among trait-like individual differences, state-like individual differences, and learning performance. J Appl Psychol. 2000;85:835-47. [PubMed]

- Bell BS, Kozlowski SW. Active learning: effects of core training design elements on self-regulatory processes, learning, and adaptability. J Appl Psychol. 2008;93:296-316. [PubMed]