| Author | Affiliation |
|---|---|
| Angela M. Mills, MD | University of Pennsylvania, Department of Emergency Medicine |
| Anthony J. Dean, MD | University of Pennsylvania, Department of Emergency Medicine |
| Frances S. Shofer, PhD | University of Pennsylvania, Department of Emergency Medicine |
| Judd E. Hollander, MD | University of Pennsylvania, Department of Emergency Medicine |
| Christine M. McCusker, RN | University of Pennsylvania, Department of Emergency Medicine |
| Michael K. Keutmann, BA | University of Pennsylvania, Department of Emergency Medicine |
| Esther H. Chen, MD | University of Pennsylvania, Department of Emergency Medicine |

ABSTRACT

Objectives

In many academic emergency departments (EDs), physicians are asked to record clinical data for research, a task that may be time consuming and may distract from patient care. We hypothesized that non-medical research assistants (RAs) could obtain historical information from patients with acute abdominal pain as accurately as physicians.

Methods

We conducted a prospective comparative study in an academic ED, comparing 29 RAs with 32 resident physicians (RPs), to assess inter-rater reliability in obtaining historical information from patients with abdominal pain. Historical features were recorded independently on standardized data forms by an RA and an RP, each blinded to the other’s answers. Discrepancies were resolved by a third person (an RA) who asked the patient to state the correct answer on a third questionnaire, which constituted the “criterion standard.” Inter-rater reliability was assessed using the kappa statistic (κ) and percent crude agreement (CrA).

Results

Sixty-five patients were enrolled (mean age 43). Across the 43 historical variables assessed, the median agreement was moderate (κ 0.59 [interquartile range 0.37–0.69]; CrA 85.9%) and varied across data categories: initial pain location (κ 0.61 [0.59–0.73]; CrA 87.7%), current pain location (κ 0.60 [0.47–0.67]; CrA 82.8%), past medical history (κ 0.60 [0.48–0.74]; CrA 93.8%), associated symptoms (κ 0.38 [0.37–0.74]; CrA 87.7%), and aggravating/alleviating factors (κ 0.09 [−0.01–0.21]; CrA 61.5%). When the RP and the RA disagreed, the RA agreed with the criterion standard more often (64% [55–71%]) than the RP did (36% [29–45%]).

Conclusion

Non-medical research assistants who focus on clinical research are often more accurate than physicians, who may be distracted by patient care responsibilities, at obtaining historical information from ED patients with abdominal pain.

INTRODUCTION

A busy emergency department (ED) is a challenging site for collecting data for prospective clinical trials. Frequently, treating physicians are asked to enroll eligible patients and complete structured data forms, a time-consuming process that can interfere with clinical responsibilities. Research assistants (RAs) without formal medical training (e.g., undergraduate and post-baccalaureate students) have been used to assist in this process by identifying eligible patients, obtaining consent, documenting demographic information on standard data forms, and helping with other aspects of data collection and management.1–3

Historical and physical examination features remain the basis for decisions about the work-up and treatment of patients with acute abdominal pain; therefore, they are usually considered essential variables in research on this topic. Several studies have suggested that historical information obtained by medical providers may be subject to significant inter-observer variability. In one such study, information recorded on standardized data sheets for a cohort of stroke patients revealed significant discrepancies in the historical elements obtained by six neurologists.4 In a study of chest pain patients, the historical features documented by nurse practitioners were less typical of angina pectoris than those documented by physicians who interviewed the same patients.5 These studies highlight the importance of assessing the reliability of the data-collection instrument as an integral part of a research project.

No study to date has examined the reliability of non-medical RAs in obtaining historical information for research. We designed and piloted a survey instrument containing standard, simple historical questions about abdominal pain. We hypothesized that non-medical RAs could reliably use this questionnaire and would be at least as accurate as resident physicians (RPs) in obtaining historical information from patients with acute abdominal pain.

METHODS

Study Design

We conducted a prospective comparative study to evaluate the reliability of the historical features obtained from ED patients with abdominal pain using a standard questionnaire administered by RAs compared to RPs. Our Institutional Committee on Research involving Human Subjects at the University of Pennsylvania approved the study. Informed consent was obtained from all subjects.

Study Setting and Population

This study was conducted at an urban university hospital ED with an annual census of approximately 55,000 visits. Adult patients with acute abdominal pain were enrolled from April 6 to 22, 2007. A survey instrument with questions about historical features was completed independently for each patient by an RA and an RP. RAs are undergraduate and post-baccalaureate students enrolled in the Academic Associate Program,3,6 a structured, credit-bearing course at the University of Pennsylvania. Students attend research-related classes and work shifts in the ED, during which they identify and enroll eligible patients for research projects and, in the current study, obtain historical information from patients with acute abdominal pain.

Study Protocol and Measurements

From 7 AM to midnight, seven days per week, the RAs identified and enrolled patients 18 years of age or older who presented with non-traumatic abdominal pain of less than 72 hours’ duration. Patients were excluded if they were pregnant or if, within the previous seven days, they had sustained abdominal trauma or undergone an abdominal surgical procedure. The RA and the RP caring for the patient completed the standardized questionnaire independently, within 20 minutes of each other, and the time of each assessment was recorded on the data forms. Discrepancies between the two forms were resolved by a third person (an RA) who was coached to ask the patient specifically: “we did not have a clear understanding of your answer to this question … [question repeated],” thus allowing patients to give either of their previous responses. This third form served as the “criterion standard.” The RAs received formal training sessions in open-ended and neutral questioning techniques intended to minimize influence on respondents’ answers.
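The discrepancy-resolution step can be sketched in code. This is a minimal illustration, not the study’s procedure or software: it assumes each completed form is a Python dict mapping a hypothetical variable name to the recorded answer, and that the criterion form holds answers only for the items that were re-asked.

```python
def find_discrepancies(ra_form, rp_form):
    """Return the variables (present on both forms) that the RA and RP answered differently."""
    shared = ra_form.keys() & rp_form.keys()
    return sorted(var for var in shared if ra_form[var] != rp_form[var])


def score_against_criterion(ra_form, rp_form, criterion_form):
    """Tally which rater's answer the criterion-standard form matched for each discrepancy."""
    tally = {"RA": 0, "RP": 0, "neither": 0, "no criterion": 0}
    for var in find_discrepancies(ra_form, rp_form):
        answer = criterion_form.get(var)
        if answer is None:
            tally["no criterion"] += 1   # third interview not obtained for this item
        elif answer == ra_form[var]:
            tally["RA"] += 1
        elif answer == rp_form[var]:
            tally["RP"] += 1
        else:
            tally["neither"] += 1        # only possible for non-dichotomous items
    return tally


# Hypothetical forms for one patient (variable names are illustrative only).
ra = {"vomiting": True, "fever": False, "worse_with_eating": True}
rp = {"vomiting": True, "fever": True, "worse_with_eating": False}
criterion = {"fever": False}

print(find_discrepancies(ra, rp))                  # ['fever', 'worse_with_eating']
print(score_against_criterion(ra, rp, criterion))  # {'RA': 1, 'RP': 0, 'neither': 0, 'no criterion': 1}
```

Items without a criterion answer are counted separately, mirroring the way the Results report how many discordant items had a criterion standard available.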

Data Analysis

Descriptive data are presented as means ± standard deviation, frequencies, and percentages. Cohen’s kappa (κ) statistic and percent crude agreement (CrA), both with 95% confidence intervals (95% CIs), were used to measure inter-rater reliability. As described elsewhere, a κ of 0 indicates agreement no better than chance and a κ of 1.00 indicates complete agreement (negative values indicate agreement worse than chance); κ <0.20 represents poor agreement, 0.21–0.40 fair agreement, 0.41–0.60 moderate agreement, 0.61–0.80 good agreement, and 0.81–1.00 excellent agreement.7 To summarize specific types of questions (e.g., past medical history), we present the median κ values with interquartile ranges (IQRs). Data were analyzed using SAS statistical software (Version 9.1, SAS Institute, Cary, NC) and StatXact (Version 6.1, Cytel Software Corporation, Cambridge, MA).
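For a single dichotomous item, Cohen’s κ compares the observed agreement p_o (the crude agreement) with the agreement p_e expected by chance from each rater’s marginal proportions: κ = (p_o − p_e) / (1 − p_e). The Python sketch below computes both measures and applies the interpretive bands listed above. It is an illustration only (the study used SAS and StatXact), it omits confidence intervals, and the sample answers are hypothetical.

```python
from collections import Counter


def crude_agreement(ra, rp):
    """Proportion of patients for whom the two raters recorded the same answer."""
    if len(ra) != len(rp) or not ra:
        raise ValueError("need two non-empty answer lists of equal length")
    return sum(a == b for a, b in zip(ra, rp)) / len(ra)


def cohen_kappa(ra, rp):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(ra)
    p_o = crude_agreement(ra, rp)
    ra_counts, rp_counts = Counter(ra), Counter(rp)
    # Chance agreement: for each category, product of the two raters' marginal proportions.
    p_e = sum(ra_counts[c] * rp_counts[c] for c in ra_counts.keys() | rp_counts.keys()) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when both raters always give the same single answer")
    return (p_o - p_e) / (1 - p_e)


def interpret_kappa(k):
    """Interpretive bands from the Data Analysis section (values of 0.20 or less treated as poor)."""
    if k <= 0.20:
        return "poor"
    if k <= 0.40:
        return "fair"
    if k <= 0.60:
        return "moderate"
    if k <= 0.80:
        return "good"
    return "excellent"


# Hypothetical answers (1 = yes, 0 = no) from an RA and an RP for ten patients.
ra = [1, 1, 0, 0, 0, 0, 0, 0, 1, 0]
rp = [1, 0, 0, 0, 0, 0, 0, 0, 1, 1]

k = cohen_kappa(ra, rp)
print(crude_agreement(ra, rp))          # 0.8
print(round(k, 2), interpret_kappa(k))  # 0.52 moderate
```

Here the raters agree on 8 of 10 patients, but because each says “yes” for 3 patients, chance alone would produce 58% agreement, so κ is only about 0.52.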

RESULTS

Sixty-five patients with acute abdominal pain were surveyed by 29 RAs and 32 RPs. The median age of the patients was 43 years; 77% were female and 54% were black. Of the 49 variables, 43 had dichotomous responses. The remaining six historical variables concerned timing (e.g., “When was the last time you vomited?”); responses to these proved highly variable, could not easily be dichotomized, and were excluded. This yielded 2754 paired comparisons (some variables had fewer comparisons, and some were restricted by gender), of which 458 (17%) were discrepant between the RP and the RA.
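The comparison counts can be checked with simple arithmetic. The sketch below assumes, as an upper bound, that every one of the 43 dichotomous variables could have been compared for every one of the 65 patients; the reported total of 2754 is lower because some variables had fewer comparisons and some were restricted by gender.

```python
patients = 65
dichotomous_variables = 43
reported_comparisons = 2754
discrepancies = 458

# Upper bound if every variable applied to, and was answered for, every patient.
max_comparisons = patients * dichotomous_variables
not_compared = max_comparisons - reported_comparisons
discrepancy_pct = 100 * discrepancies / reported_comparisons

print(max_comparisons)            # 2795
print(not_compared)               # 41
print(round(discrepancy_pct, 1))  # 16.6, reported in the text as 17%
```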

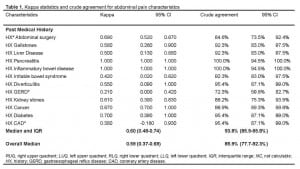

Inter-rater reliability measures for all historical variables are listed in Table 1. Overall, the median agreement was moderate (κ 0.59 [IQR 0.37–0.69]; CrA 85.9%) but varied across data categories: initial pain location (κ 0.61 [IQR 0.59–0.73]; CrA 87.7%), current pain location (κ 0.60 [IQR 0.47–0.67]; CrA 82.8%), past medical history (κ 0.60 [IQR 0.48–0.74]; CrA 93.8%), associated symptoms (κ 0.38 [IQR 0.37–0.74]; CrA 87.7%), and aggravating/alleviating factors (κ 0.09 [IQR −0.01–0.21]; CrA 61.5%).
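Each category summary above is the median, with IQR, of the per-variable κ values in that category. A sketch of that summary step in Python follows. The κ values are hypothetical, not the study’s data, and because software packages use different quartile conventions (the study used SAS), IQR endpoints computed this way may differ slightly from those a given package reports.

```python
import statistics


def summarize_kappas(kappas):
    """Median and interquartile range (Q1, Q3) of per-variable kappa values."""
    median = statistics.median(kappas)
    # "inclusive" interpolates between order statistics; other quartile conventions exist.
    q1, _, q3 = statistics.quantiles(kappas, n=4, method="inclusive")
    return median, (q1, q3)


# Hypothetical per-variable kappas for one data category (illustrative only).
category_kappas = [0.21, 0.37, 0.45, 0.59, 0.62, 0.69, 0.74]
median, (q1, q3) = summarize_kappas(category_kappas)
print(f"median {median:.2f} [IQR {q1:.2f}-{q3:.2f}]")
```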

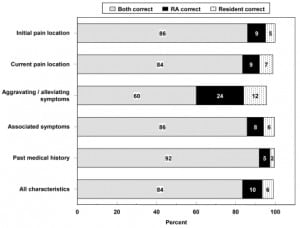

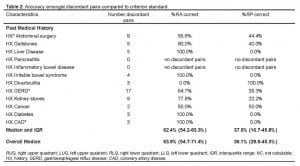

Crude agreement between the two groups was above 80% in all but one of the five general categories (Figure 1). Of the 458 discordant results between the RP and RA, a criterion standard was available for 429 (94%). In these disagreements, the RA agreed with the criterion standard more often (N=274, 64% [55%–71%]) than the RP did (N=155, 36% [29%–45%]) (Table 2).
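The percentages in this paragraph follow directly from the reported counts, as the short check below shows. It reproduces only the point estimates; the bracketed intervals are not recomputed here because the method used to obtain them is not described in the text.

```python
discordant = 458
with_criterion = 429
ra_agreed, rp_agreed = 274, 155

# Every resolved discrepancy on a dichotomous item favors exactly one rater.
assert ra_agreed + rp_agreed == with_criterion

print(f"criterion available: {100 * with_criterion / discordant:.0f}%")  # 94%
print(f"RA matched criterion: {100 * ra_agreed / with_criterion:.1f}%")  # 63.9%
print(f"RP matched criterion: {100 * rp_agreed / with_criterion:.1f}%")  # 36.1%
```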

DISCUSSION

This study explores the inter-rater reliability of historical features obtained by RAs and RPs using a standard questionnaire in the evaluation of abdominal pain. We found overall moderate agreement between RAs and RPs across 43 historical variables. There was good agreement for initial pain location and moderate agreement for current pain location and past medical history. For associated symptoms, agreement was only fair by the κ statistic, although crude agreement was 88%. The poorest agreement was for aggravating and alleviating factors, for which the two groups agreed only 62% of the time. Because of the mathematical properties of the κ statistic, a given rate of discrepancy produces a lower κ for an infrequent clinical finding than for a common one. This may partly explain the low κ scores for aggravating and alleviating factors, which span a wide range of items, any one of which is encountered relatively infrequently.
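The dependence of κ on how common a finding is can be illustrated with two hypothetical 2 × 2 tables (not study data) that share the same 10% discrepancy rate. When the finding is present in about half of patients, κ is 0.80; when it is present in about one in ten, κ falls to roughly 0.44, even though crude agreement is 90% in both cases.

```python
def kappa_from_2x2(both_yes, ra_only_yes, rp_only_yes, both_no):
    """Cohen's kappa from the four cells of a 2x2 agreement table for two raters."""
    n = both_yes + ra_only_yes + rp_only_yes + both_no
    p_o = (both_yes + both_no) / n                        # observed (crude) agreement
    ra_yes = (both_yes + ra_only_yes) / n                 # RA's marginal "yes" proportion
    rp_yes = (both_yes + rp_only_yes) / n                 # RP's marginal "yes" proportion
    p_e = ra_yes * rp_yes + (1 - ra_yes) * (1 - rp_yes)   # chance agreement
    return (p_o - p_e) / (1 - p_e)


# Both hypothetical tables: 100 patients, 10 disagreements (crude agreement 90%).
common_finding = kappa_from_2x2(45, 5, 5, 45)  # finding present in ~50% of patients
rare_finding = kappa_from_2x2(5, 5, 5, 85)     # finding present in ~10% of patients

print(round(common_finding, 2))  # 0.8
print(round(rare_finding, 2))    # 0.44
```

The rare finding has a much higher chance-agreement term (0.82 versus 0.50), leaving less room above chance for the same observed agreement.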

Our results are consistent with prior studies of inter-rater reliability of physicians obtaining historical features, which showed fair to excellent agreement (κ range 0.27–0.89) in hospitalized chest pain patients,8 fair to good agreement (κ range 0.37–0.69) in suspected stroke patients,9 and good agreement (κ range 0.58–0.71) in patients with suspected osteoarthritis.10 The current study also supports the findings of reports in which non-physician army medical practitioners demonstrated good overall agreement with physicians in the assessment of upper respiratory infection.11,12 Specific to abdominal pain, our results are also consistent with those of a recent study comparing pediatric emergency physicians with surgeons in the evaluation of appendicitis in children, which showed fair to excellent agreement (κ range 0.33–0.82) for historical questions.13

Accurate data collection is an essential component of high-quality clinical research. Prospectively collected data are generally considered to be of higher quality than data collected retrospectively or through chart abstraction. In many prospective studies conducted in the ED, the treating physician is asked to record subjects’ clinical data. This process may be cumbersome and time consuming. It may also distract from or interfere with the physician’s other responsibilities, or create a fundamental conflict between the physician’s roles as care provider and as researcher. To our knowledge, this is the first study to compare RAs with no formal medical training with RPs in obtaining historical information for research purposes. If, as the current study suggests, non-medical research assistants can obtain historical information about ED patients’ acute abdominal pain that is as accurate as, or more accurate than, that obtained by the treating physician, the burden of data collection may be lifted from the treating physician, allowing these data to be obtained and recorded in a less hurried and more meticulous manner. This may result in higher-quality medical research on this topic in the ED setting.

LIMITATIONS

As this study was conducted in a single institution with an established Academic Associate Program, our results may not be generalizable to other practice settings. Under-enrollment of patients evaluated during the overnight hours, of the most acutely ill patients, and of patients who did not consent to participate may have introduced selection bias. We know of no “gold standard” that can establish with certainty that patient responses to historical items are accurate; this study design was therefore our best attempt to assess accuracy and inter-rater reliability in obtaining historical data from patients with abdominal pain. When interviewed by the third person for the “criterion standard” form, patients may have been prompted to give answers consistent with one of their prior responses. The third-person interviewer may also have tended to “coach” respondents to resolve discrepancies in a way that supported the data obtained by the first RA. Neither RAs nor RPs were blinded to the purpose of the study, which may have biased our results.

CONCLUSION

Non-medical research assistants focused on clinical research are often more accurate than physicians, who may be distracted by patient care responsibilities, at obtaining data for clinical research. They can reliably use a standardized data collection sheet to obtain historical information from patients who present to the ED with acute abdominal pain.

Footnotes

Supervising Section Editor: Craig L. Anderson, MPH, PhD

Submission history: Submitted April 15, 2008; Revision Received August 26, 2008; Accepted November 09, 2008

Full text available through open access at http://escholarship.org/uc/uciem_westjem

Address for Correspondence: Angela M. Mills, MD. Department of Emergency Medicine, Ground Floor, Ravdin Building, Hospital of the University of Pennsylvania, 3400 Spruce Street, Philadelphia, PA 19104-4283

Email: millsa@uphs.upenn.edu

Conflicts of Interest: By the WestJEM article submission agreement, all authors are required to disclose all affiliations, funding sources, and financial or management relationships that could be perceived as potential sources of bias. The authors disclosed none.

REFERENCES

1. Bradley K, Osborn HH, Tang M. College research associates: a program to increase emergency medicine clinical research productivity. Ann Emerg Med. 1996;28:328–333.

2. Cobaugh DJ, Spillane LL, Schneider SM. Research subject enroller program: a key to successful emergency medicine research. Acad Emerg Med. 1997;4:231–233.

3. Hollander JE, Valentine SM, Brogan GX Jr. Academic associate program: integrating clinical emergency medicine research with undergraduate education. Acad Emerg Med. 1997;4:225–230.

4. Shinar D, Gross CR, Mohr JP, et al. Interobserver variability in the assessment of neurologic history and examination in the Stroke Data Bank. Arch Neurol. 1985;42:557–565.

5. Hickam DH, Sox HC Jr, Sox CH. Systematic bias in recording the history in patients with chest pain. J Chronic Dis. 1985;38:91–100.

6. Hollander JE, Singer AJ. An innovative strategy for conducting clinical research: the academic associate program. Acad Emerg Med. 2002;9:134–137.

7. Brennan P, Silman A. Statistical methods for assessing observer variability in clinical measures. BMJ. 1992;304:1491–1494.

8. James TL, Feldman J, Mehta SD. Physician variability in history taking when evaluating patients presenting with chest pain in the emergency department. Acad Emerg Med. 2006;13:147–152.

9. Hand PJ, Haisma JA, Kwan J, et al. Interobserver agreement for the bedside clinical assessment of suspected stroke. Stroke. 2006;37:776–780.

10. Peat G, Wood L, Wilkie R, et al. How reliable is structured clinical history-taking in older adults with knee problems? Inter- and intraobserver variability of the KNE-SCI. J Clin Epidemiol. 2003;56:1030–1037.

11. Wilson FP, Wilson LO, Wheeler MF, et al. Algorithm-directed care by nonphysician practitioners in a pediatric population: Part I. Adherence to algorithm logic and reproducibility of nonphysician practitioner data-gathering behavior. Med Care. 1983;21:127–137.

12. Wood RW, Diehr P, Wolcott BW, et al. Reproducibility of clinical data and decisions in the management of upper respiratory illnesses: a comparison of physicians and non-physician providers. Med Care. 1979;17:767–779.

13. Kharbanda AB, Fishman SJ, Bachur RG. Comparison of pediatric emergency physicians’ and surgeons’ evaluation and diagnosis of appendicitis. Acad Emerg Med. 2008;15:119–125.