| Author | Affiliation |
|---|---|
| Phaedra S. Corso, PhD, MPA | University of Georgia, College of Public Health, Department of Health Policy and Management, Athens, Georgia |
| Nathaniel Taylor, MPH | University of Georgia, College of Public Health, Department of Health Policy and Management, Athens, Georgia |
| Jordan Bennett, MPH | University of Georgia, College of Public Health, Department of Health Policy and Management, Athens, Georgia |
| Justin Ingels, MPH, MS | University of Georgia, College of Public Health, Department of Health Policy and Management, Athens, Georgia |
| Shannon Self-Brown, PhD | Georgia State University, School of Public Health, Atlanta, Georgia |
| Daniel J. Whitaker, PhD | Georgia State University, School of Public Health, Atlanta, Georgia |

INTRODUCTION

In adopting evidence-based practices (EBP), program administrators most frequently focus on program effectiveness. But there is growing recognition of the importance of program cost and of economic analysis for allocating scarce resources for prevention and intervention programs.1 Economic analysis includes the assessment of programmatic costs using a micro-costing approach (precise individual resource valuation) to value the resources required to implement programmatic processes and activities so that programs can be compared to each other.2–5 Differences in program cost are typically driven by differences in program length, the staff required to implement the program, and materials. However, another key source of program cost is the implementation strategy.

Program administrators must consider the costs to adopt or implement a program. Translation or implementation science focuses on the processes by which EBP are implemented. Less rigorous implementation procedures often fail to yield implementation with fidelity, which is needed to achieve program outcomes.6 More rigorous strategies are more expensive, but there is evidence that they are needed to achieve implementation with fidelity.7,8 Thus, the consideration of implementation costs is an important area of study. That is, just as intervention scientists have studied how much intervention is needed for behavior change, implementation scientists must study how much implementation is necessary to achieve fidelity.

To date, however, few studies have considered costs in implementation research,9 and fewer still have specifically focused on the costs of implementing EBP in the field of child maltreatment (CM) prevention.10 To our knowledge, no studies have calculated implementation costs for variants of a model and then related those costs to implementation outcomes. This paper presents a calculation of marginal implementation costs for 2 variants of a training program for the SafeCare® model, an evidence-based parenting model for child maltreatment prevention. SafeCare® has been disseminated to child welfare systems across 20 U.S. states.

The SafeCare® dissemination model includes a “train-the-trainer” component in which staff external to the purveyor (the National SafeCare® Training and Research Center [NSTRC]) are trained over time to train local staff. The training of trainers is notoriously difficult and often fails because of the lack of follow-up support.11 In the study reported here, we trained trainers under 2 different models to examine the impact on trainee and client outcomes. A first step in understanding the impact of the 2 models is to calculate the marginal cost differences between them. The 2 training models differed primarily in their provision of support to new trainers following completion of the train-the-trainer program. Trainers were randomly assigned to 1 of 2 training models, standard or enhanced. The “standard” model included a 5-day workshop with skill demonstration and proficiency improvement through role-playing activities and live training sessions. It also included some ongoing support from NSTRC training staff, and in turn, trainers provided some support to the providers they trained. The second model, the “enhanced” approach, provided extensive ongoing consultation from NSTRC training staff for 6 months after completion of the trainer training workshop.

In this paper, we present data collected to determine marginal cost differences between the 2 models. Although we do not present data on implementation and client outcomes, this paper serves as an example of how data collection on this topic can be accomplished and how marginal costs are computed.

METHODS

This analysis considers those costs that are marginally different between the 2 train-the-trainer implementation models from the provider perspective. Costs for all training activities up through the initial workshop were not included, nor were non-personnel costs such as space and supplies, because those resources did not vary between the standard and enhanced models. The marginal resources, and therefore costs, that differed between the 2 models were in the personnel time required of NSTRC staff (the trainers in the model), trainers (those being trained), and coaches (those providing SafeCare® services who are directly supervised by the trainers). All time spent by personnel was prospectively assessed from weekly time diaries completed by trainers over two 8-week periods across 2 different coaches between July 2010 and September 2011. We calculated total time required to implement the 2 training models by multiplying the average 8-week time costs of each model by 3.25 to assess total time for the 26-week (6-month) program. Activity categories included: providing fidelity monitoring, feedback, reviewing coaching sessions, preparation and tracking of fidelity, coach-led team meetings, other coach support (support other than routine fidelity monitoring feedback sessions documented under the feedback activity category), travel, and receiving support from NSTRC staff. These same time diaries provided information on the time spent by coaches from 2 of the activity categories (fidelity feedback and other coach support) and the time spent by NSTRC staff from one of the activity categories (support from NSTRC staff). We excluded from the analysis 2 trainers who did not participate for the full 8 weeks of data collection.
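The extrapolation from the 8-week diary window to the 26-week program can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study; the function name `scale_to_program` and the example value of 10 hours are hypothetical.

```python
def scale_to_program(mean_hours_observed, weeks_observed=8, program_weeks=26):
    """Extrapolate mean diary hours from the observation window to the full program."""
    # 26 weeks / 8 weeks = 3.25, the multiplier described in the text
    factor = program_weeks / weeks_observed
    return mean_hours_observed * factor


# Hypothetical example: an average of 10 hours logged over an 8-week window
print(scale_to_program(10.0))  # 32.5
```

The scaling assumes that time use in the observed 8-week windows is representative of the full 6-month program.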

We calculated total costs for personnel time by using hourly wages plus fringe, if applicable, in 2011 U.S. dollars. Trainers received $30 per hour with no fringe benefits. Coaches received $34 per hour and NSTRC staff $22 per hour, with an additional 27% in fringe benefits for each. All salaries and benefits remained constant throughout the intervention. Total costs were summarized at the personnel level (staff, trainer, or coach), activity level, and type of contact within most of the activity categories (in-person, by phone, or through email). We tested for significant differences in time and cost between the implementation models using t-tests in Stata (version 12).12
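As a rough sketch of the costing step, the snippet below applies the wage and fringe figures stated above to one activity category, support from NSTRC staff. It assumes, for illustration only, that the trainer and the NSTRC staff member each spend the same number of hours on that activity. The names `HOURLY_WAGE`, `FRINGE_RATE`, `loaded_rate`, and `activity_cost` are hypothetical and do not come from the study.

```python
# Hourly wages in 2011 U.S. dollars, as stated in the text
HOURLY_WAGE = {"trainer": 30.0, "coach": 34.0, "nstrc_staff": 22.0}
# Fringe benefits as a fraction of wage (trainers received none)
FRINGE_RATE = {"trainer": 0.0, "coach": 0.27, "nstrc_staff": 0.27}


def loaded_rate(role):
    """Hourly wage plus fringe benefits for one personnel category."""
    return HOURLY_WAGE[role] * (1.0 + FRINGE_RATE[role])


def activity_cost(hours, roles):
    """Cost of an activity, assuming every listed role spends `hours` on it."""
    return hours * sum(loaded_rate(role) for role in roles)


# Assumption: trainer and NSTRC staff log equal time for this activity
roles = ["trainer", "nstrc_staff"]
enhanced = activity_cost(8.94, roles)  # mean hours per trainer, enhanced model
standard = activity_cost(2.64, roles)  # mean hours per trainer, standard model
print(round(enhanced), round(standard))  # 518 153
```

Under this equal-hours assumption, the rounded figures line up with the support-from-staff costs reported in the Results. Categories in which the roles log different amounts of time would need role-specific hours.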

RESULTS

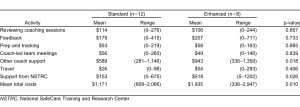

Table 1 reports the mean total personnel time for each train-the-trainer model, standard (n=12) versus enhanced (n=8), by personnel and activity categories. Trainers in the enhanced model spent significantly more time than trainers in the standard model (33.59 versus 21.5 hours per trainer, p=0.025). This increased time was concentrated primarily in 2 activities, other coach support (12.94 versus 8.08 hours per trainer, p=0.018) and support from staff (8.94 versus 2.64 hours per trainer, p=0.026). Trainers in the enhanced model also spent significantly more in-person time with coaches and staff than trainers in the standard model (8.67 versus 1.49 hours per trainer, p=0.0023).

Table 1. Mean personnel time, in hours, for the standard versus enhanced implementation models implemented over a 6-month time period.

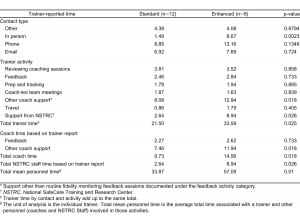

Table 2 reports the mean total cost of all personnel time by activity category. The mean total cost was $1,935 for the enhanced model and $1,171 for the standard model, a statistically significant difference of $764 (p=0.010). Costs were significantly different for 2 activity categories, other coach support ($943 versus $589, p=0.018) and support from staff ($518 versus $153, p=0.026).

DISCUSSION

As child welfare systems move towards adopting evidence-based approaches for preventing child neglect outcomes, information on the costs of different implementation strategies will be essential. In this study, where an enhanced train-the-trainer model was compared to a standard model, the marginal cost difference between the 2 was statistically significant but not so large as to make the enhanced model necessarily cost prohibitive from a programmatic perspective. These differences in costs are important when one considers widespread implementation and dissemination of the SafeCare® program, especially when comparing costs to outcomes.

A focus on costs of implementation methods raises the question of how rigorous implementation can be done at the lowest cost. One possibility for reducing implementation cost is the use of technology and social media. Technology has a strong role to play both in delivering interventions to parents and in the training and technical support provided to staff being trained.13–16 Many purveyors of EBP have developed web-based training courses, reducing the need for expert trainers to conduct workshops.17 Support following training may be conducted more effectively via telemedicine technologies that allow for real-time communication without the necessity of travel,18 including the use of mobile technologies such as Skype or FaceTime for services delivered in the home. Social media (e.g., Facebook) can also be used as a support tool for trainers or providers in a learning community. The impact and cost of these technologies are largely unknown; however, if they reduce expert personnel time, they are likely to reduce overall costs.

LIMITATIONS

Several important limitations should be considered with the results of this study. First, while the methods used to compare costs can be applied to other EBP research, the specific activity categories are only applicable to SafeCare®. Second, the small sample size may have skewed the results, so the findings should be interpreted with caution. Third, although critical for understanding the differences between implementation strategies, this cost analysis does not allow us to assess the relative cost effectiveness of the standard versus enhanced train-the-trainer model. Thus, the next step in this research would be to compare marginal cost differences to marginal differences in outcomes between the standard and enhanced models. Specifically, it will be important to compare provider fidelity to the model (a key implementation outcome) and client behavior change to understand whether the enhanced model provides any value for its added cost. This will give program purveyors and decision makers an accurate representation of the cost of incremental improvements in outcomes between the 2 models.

CONCLUSION

This paper demonstrates cost differences between 2 different implementation models for training trainers in an EBP. Assessing the cost effectiveness of implementation processes is an important next step for decision makers who wish to implement SafeCare®. Understanding the overall cost, the source of cost differences, and the cost effectiveness of EBP will allow them to choose the best processes within a given budget for maximal impact.

Footnotes

Supervising Section Editor: Monica H. Swahn, PhD, MPH

Full text available through open access at http://escholarship.org/uc/uciem_westjem

Conflicts of Interest: By the WestJEM article submission agreement, all authors are required to disclose all affiliations, funding sources and financial or management relationships that could be perceived as potential sources of bias. The authors disclosed none.