| Author | Affiliation |
|---|---|
| Jeffrey Druck, MD | University of Colorado School of Medicine, Division of Emergency Medicine, Denver, CO |
| Morgan A. Valley, MS | University of Colorado School of Medicine, Division of Emergency Medicine, Denver, CO |
| Steven R. Lowenstein, MD, MPH | University of Colorado School of Medicine, Division of Emergency Medicine, Denver, CO |

ABSTRACT

Introduction:

The Residency Review Committee training requirements for emergency medicine (EM) residents are defined by consensus panels, with specific topics abstracted from lists of patient complaints and diagnostic codes. The relevance of specific curricular topics to actual practice has not been studied. We compared residency graduates’ self-assessed preparation during training with the importance of a variety of EM procedural skills in practice.

Methods:

We distributed a web-based survey to all graduates of the Denver Health Residency Program in EM over the past 10 years. The survey addressed: practice type and patient census; years of experience; additional procedural training beyond residency; and confidence, preparation, and importance in practice for 12 procedures (extensor tendon repair, transvenous pacing, lumbar puncture, applanation tonometry, arterial line placement, anoscopy, CT scan interpretation, diagnostic peritoneal lavage, slit lamp usage, ultrasonography, compartment pressure measurement and procedural sedation). For each skill, preparation and importance were measured on four-point Likert scales. We compared mean preparation and importance scores using paired-sample t-tests to identify areas of under- or over-preparation.

Results:

Seventy-four residency graduates (59% of those eligible) completed the survey. There were significant discrepancies between importance in practice and preparation during residency for eight of the 12 skills. Under-preparation was significant for transvenous pacing, CT scan interpretation, slit lamp examinations and procedural sedation. Over-preparation was significant for extensor tendon repair, arterial line placement, peritoneal lavage and ultrasonography. There were strong correlations (r>0.3) between preparation during residency and confidence for 10 of the 12 procedural skills, suggesting a high degree of internal consistency for the survey.

Conclusion:

Practicing emergency physicians may be uniquely qualified to identify areas of under- and over-preparation during residency training. There were significant discrepancies between importance in practice and preparation during residency for eight of 12 procedures. There was a strong correlation between confidence and preparation during residency for almost all procedural skills, reinforcing the tenet that residency training is the primary locus of instruction for clinical procedures.

INTRODUCTION

How do we assess what we need to teach? Experts in instructional design agree that a periodic needs assessment is a critical element when planning or revising the content of any educational endeavor.1 For emergency medicine (EM) residencies, the Residency Review Committee follows the 2007 Model of the Clinical Practice of Emergency Medicine.2 This model curriculum, first released in 2001, was based on expert panel recommendations. It has undergone extensive revisions and now incorporates empirical data as well as expert review. The 2003 release notes that “the ACEP Academic Affairs Committee has used the emergency medicine model to survey emergency medicine residency program directors and recent residency graduates to identify curricula gaps and educational needs.”3 However, this method of evaluation, the post-residency survey, although used in other fields, has never been applied to specific content areas in EM.4

Practicing emergency physicians (EP) may be in the best position to identify areas of over- and under-preparation in their residency programs. They may be uniquely qualified to compare their training with the demands of clinical practice in the “real world.”5 Therefore, we examined procedural skill training, a subset of our residency curriculum. We surveyed recent graduates to compare “preparation during residency training” and “importance in clinical practice” for 12 common procedural skills.

METHODS

The principal objective of this study was to identify areas of over- and under-preparation for commonly taught EM procedures. We distributed a web-based survey to all physicians who had graduated from the Denver Health Emergency Medicine Residency program in the past 10 years (1997–2007). The study protocol was approved by the Colorado Multiple Institutional Review Board.

Survey Design

We convened an expert panel of five senior EM clinicians from our institution. After evaluating the list of procedures from the 2007 Clinical Practice Model (Appendix 1, Procedures),2 panel members concluded that some procedures (e.g., central line insertion and orotracheal intubation) were so clearly important and so routinely performed in residency training that they should be excluded from the survey. Instead, the panel agreed to focus on 12 procedures that it judged to be “important but not emergent.” These procedures included: extensor tendon repair; transvenous pacing; lumbar puncture; applanation tonometry; arterial line placement; anoscopy; CT scan interpretation; diagnostic peritoneal lavage; slit lamp usage; ultrasonography; compartment pressure measurement; and procedural sedation.

The 46-item survey collected graduates’ demographic information (age and gender), years of practice since graduation, board certification status, and any fellowship or other post-residency training. Survey questions also addressed current ED practice type (academic, military, private, urgent care or other), geographic locale (urban, suburban or rural), and annual ED census.

A principal objective of this study was to compare preparation during residency training and importance in practice for these 12 procedural skills. Preparation during training was ascertained by asking this question: “Thinking back to residency and keeping in mind the didactic and practical instruction you received, please rate how well your residency training program prepared you to perform each procedure, with ‘four’ being excellent instruction and great preparation and ‘one’ being poor preparation with no instruction at all.” Importance in practice was measured by asking, “Please rate the importance of each of these procedures in your current practice currently, with ‘four’ being extremely important and ‘one’ being not important at all.”

To assess the internal consistency of the survey, we also calculated “confidence” scores for each procedure, using a similar four-point Likert scale. We hypothesized that preparation during training and confidence would be linked; we tested for an association between “confidence” and “preparation during residency training,” by calculating Pearson’s correlation coefficients for each procedural skill. Measuring these correlations also provided a means to test the hypothesis that residency is a primary locus of instruction for procedural training.
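The per-procedure correlation check described above can be sketched in Python. This is a minimal illustration, not the authors' analysis code: the function name `pearson_r` and the six paired responses below are hypothetical, and position i in each list is assumed to hold the same respondent's four-point Likert rating.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson's correlation coefficient for two paired lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and squared deviations; the 1/(n - 1)
    # factors of covariance and variance cancel in the ratio.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one set of ratings is constant")
    return sxy / sqrt(sxx * syy)


# Hypothetical ratings from six respondents for a single procedure.
preparation = [4, 3, 3, 2, 1, 4]
confidence = [4, 3, 2, 2, 1, 3]

r = pearson_r(preparation, confidence)
print(f"r = {r:.2f}")  # prints: r = 0.90
```

Running the same function once per procedure would yield the 12 coefficients that are compared against the r > 0.3 cutoff. In Python 3.10 and later, `statistics.correlation` computes the same coefficient; testing whether r differs from zero would additionally require the t distribution (for example, via `scipy.stats.pearsonr`).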

Several EM clinicians pilot-tested the survey to improve the clarity of the questions and response choices and to test the electronic interface. Criterion validity was strengthened by using procedures included in the 2007 Clinical Practice Model.2,6

The survey was distributed by email to all 126 residency graduates from the previous 10 years. The email contained a link to a commercial survey web site (Zoomerang.com®). One reminder email was sent to all graduates whose email addresses were valid at the time of initial survey deployment.

Statistical Analysis

The analysis of the survey data proceeded in two steps. First, we summarized participants’ demographic characteristics and survey responses using means and standard deviations, or medians and ranges, for continuous variables, and proportions with 95% confidence intervals for categorical variables.
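For categorical variables, a 95% confidence interval for a proportion can be computed as sketched below. The article does not state which interval method was used; this sketch assumes the simple normal-approximation (Wald) interval, and the function name `wald_ci` is hypothetical. The example counts are the response figures reported in the Results (74 of 126 graduates).

```python
from math import sqrt


def wald_ci(successes: int, n: int, z: float = 1.96) -> tuple[float, float, float]:
    """Return (proportion, lower, upper) using the normal-approximation (Wald) interval.

    z = 1.96 gives an approximate 95% interval.
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    half_width = z * sqrt(p * (1 - p) / n)
    # Clip to [0, 1]; the Wald interval can spill outside this range near 0 or 1.
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


p, lower, upper = wald_ci(74, 126)
print(f"{p:.1%} (95% CI {lower:.1%} to {upper:.1%})")
# prints: 58.7% (95% CI 50.1% to 67.3%)
```

For very small counts (for example, the 1% of graduates in military practice), the Wald interval behaves poorly, and the Wilson score interval is generally preferred.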

Second, we performed bivariate analyses to test for differences between mean preparation and mean importance scores for each procedure. To measure the significance of the differences, paired sample t-tests and 95% confidence intervals were calculated.
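The paired comparison can be sketched as follows. Each respondent contributes one difference (importance minus preparation) for a procedure, and the t statistic is the mean difference divided by its standard error. This is a minimal standard-library sketch with hypothetical names (`PairedResult`, `paired_t`) and hypothetical ratings. Because the standard library has no t-distribution quantiles, the caller must supply the critical value `t_crit` (for example, from `scipy.stats.t.ppf(0.975, df)`); `scipy.stats.ttest_rel` would return the p-value directly.

```python
from math import sqrt
from statistics import mean, stdev
from typing import NamedTuple


class PairedResult(NamedTuple):
    mean_diff: float  # > 0 means importance exceeds preparation (under-preparation)
    t: float          # paired-sample t statistic
    df: int           # degrees of freedom, n - 1
    ci_low: float     # lower confidence bound for mean_diff
    ci_high: float    # upper confidence bound for mean_diff


def paired_t(importance: list[float], preparation: list[float],
             t_crit: float) -> PairedResult:
    """Paired-sample t-test of importance vs. preparation ratings for one procedure."""
    if len(importance) != len(preparation) or len(importance) < 2:
        raise ValueError("need two equal-length lists with at least two respondents")
    diffs = [imp - prep for imp, prep in zip(importance, preparation)]
    n = len(diffs)
    d_bar = mean(diffs)
    se = stdev(diffs) / sqrt(n)  # standard error of the mean difference
    if se == 0:
        raise ValueError("t is undefined when every difference is identical")
    return PairedResult(d_bar, d_bar / se, n - 1,
                        d_bar - t_crit * se, d_bar + t_crit * se)


# Hypothetical ratings from five respondents; 2.776 is the two-sided 95%
# critical value of t with 4 degrees of freedom.
importance = [4, 4, 3, 4, 3]
preparation = [2, 3, 3, 2, 2]
res = paired_t(importance, preparation, t_crit=2.776)
print(f"mean diff {res.mean_diff:.2f}, t({res.df}) = {res.t:.2f}, "
      f"95% CI {res.ci_low:.2f} to {res.ci_high:.2f}")
# prints: mean diff 1.20, t(4) = 3.21, 95% CI 0.16 to 2.24
```

The sign convention determines the label: under this sketch's ordering, a positive mean difference corresponds to under-preparation and a negative one to over-preparation.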

The survey questions, Likert scales and statistical methods utilized in this study were based on earlier residency training evaluations.5,6,7,8

RESULTS

Among 126 eligible participants, 74 (59%) completed the survey. The median annual ED census was 50,000 (range 10,000 to 130,000), and the median number of years in practice was 5.0 (range 0.5 to 14). The majority (72%) were practicing in private settings; smaller proportions were in academic (23%), urgent care (3%) or military (1%) practices. Fifty-six percent of graduates described their ED practice settings as “urban,” 36% as “suburban,” and 8% as “rural.” All participants were either board-certified or had been in practice for less than the time required for board eligibility. Five participants (7%) were fellowship trained.

Analysis of Preparation vs. Importance

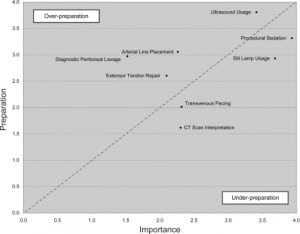

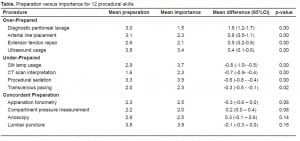

When preparation and importance scores for the 12 procedures were compared, eight of the 12 procedures showed statistically significant differences (Table). Preparation exceeded importance for four procedures: extensor tendon repair; arterial line placement; diagnostic peritoneal lavage; and ultrasonography. Importance exceeded preparation in four areas: transvenous pacing; CT scan interpretation; slit lamp usage; and procedural sedation. The figure highlights these eight procedural skills for which there was significant over- or under-preparation. Four procedures (lumbar puncture, applanation tonometry, anoscopy and compartment pressure measurement) appeared to be appropriately emphasized during training.

When survey participants were asked about their “other” sources of procedural training, 74% cited textbooks, 59% “trial and error,” 30% continuing education courses, and 30% procedural training from colleagues. When asked about additional procedures that were important but not taught adequately, participants mentioned billing procedures and advanced airway techniques most frequently.

Preparation and confidence scores were significantly and strongly correlated (r > 0.3) for 10 of the 12 procedures, suggesting a high degree of internal consistency. The strong association between residency training and confidence also suggested that residency was a major source of procedural skill training.

There was no association between the number of years in practice and preparation, importance or confidence for any procedural skill. Physicians practicing in rural areas rated CT scan interpretation as “important” or “very important” more often than their urban or suburban counterparts, but this difference was not statistically significant (p = 0.16), perhaps because of the small number of rural emergency physicians who participated in the survey.

DISCUSSION

In this study we looked to recent graduates to educate us about deficiencies in our residency training program. This technique, and the statistical methods we used to compare preparation and importance, were first suggested by Kern et al.,5 who proposed that “information from former trainees [can] provide a view of training that would be based on the demands of practice in the real world.”

These findings suggest that modest changes in our curriculum may be necessary to bring preparation more in line with the demands of practice. Of note, two procedures demonstrated marked differences between preparation and importance. Graduates reported significant under-training in CT scan interpretation; in contrast, they reported being over-trained in diagnostic peritoneal lavage.

Similar studies can easily be performed by other residency programs to identify areas of under- and over-training. These techniques may also prove useful in evaluating other skill and cognitive areas of the EM core curriculum, such as critical care, toxicology, orthopedics or other subspecialty disciplines.

The ACGME Outcome Project7 warns that “[residency] programs are expected to show evidence of how they use educational outcomes data to improve individual resident and overall program performance.” This study illustrates one technique that program directors can use to meet the ACGME requirements. Without gathering periodic feedback from recent graduates, it will be more difficult to effect needed curricular change.

LIMITATIONS

This study has several important limitations. First, it is based on a relatively small sample of recent graduates from a single EM residency program. Also, we only studied 12 selected procedures. Our results may not apply to other residency programs or their graduates, or to other procedural skills. The sample size also limits the precision of our results and the power to detect differences among residents, practice settings and specific procedures. Additionally, the survey response rate was 59%, which is acceptable but not ideal. We could not collect any information about graduates who did not respond to the survey; therefore, we cannot assess the direction or magnitude of any nonparticipation bias. Also, all of the data come from self-reports, and there is no assurance that responses are reliable or valid. Nonetheless, our survey and analytic methods were adapted from previous residency training evaluations.5,6,7,8

We also acknowledge that some differences between preparation and importance scores may be statistically, but not educationally, significant. When Plauth et al.8 studied hospitalists’ perceptions of their training needs, they arbitrarily defined “meaningful differences” as those in which the difference between the mean preparation and mean importance scores was at least 1.0. Applying that standard to our study, three procedures (extensor tendon repair, CT scan interpretation and diagnostic peritoneal lavage) demonstrated “educationally meaningful differences.”
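The 1.0-point screen borrowed from Plauth et al. is simple arithmetic on the two mean scores and can be sketched as a classification step. The function name `classify`, the placeholder procedure names and the mean scores below are hypothetical, not values from this study; the screen looks only at the size and direction of the gap, so statistical significance would still be judged separately from the paired t-tests.

```python
MEANINGFUL_GAP = 1.0  # threshold on |mean importance - mean preparation|


def classify(mean_preparation: float, mean_importance: float,
             threshold: float = MEANINGFUL_GAP) -> str:
    """Label a procedure by the size and direction of its importance-preparation gap."""
    # Round to guard against floating-point artifacts when a gap
    # lands exactly on the threshold.
    gap = round(mean_importance - mean_preparation, 6)
    if gap >= threshold:
        return "meaningful under-preparation"
    if gap <= -threshold:
        return "meaningful over-preparation"
    return "below threshold"


# Hypothetical (mean preparation, mean importance) pairs on the 1-4 scale.
scores = {
    "procedure_A": (2.0, 3.2),
    "procedure_B": (3.4, 2.1),
    "procedure_C": (2.8, 3.3),
}
for name, (prep, imp) in scores.items():
    print(f"{name}: gap {imp - prep:+.1f} -> {classify(prep, imp)}")
```

Using `>=` and `<=` makes a gap of exactly 1.0 count as meaningful, matching the “at least 1.0” criterion described above.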

We also learned in this investigation that some procedures are likely to receive higher or lower importance ratings in different EM practice settings. As noted earlier, rural practitioners tended to assign a higher importance rating to CT scan interpretation, although this difference was not statistically significant. Also, our graduates frequently reported that three procedures (thoracotomy, lateral canthotomy and transvenous pacing) were emphasized in training but were unimportant in their practices. Interestingly, although transvenous pacing was rated as under-trained, it was also selected as unimportant. This apparent contradiction may reflect a split among respondents, with one subset rating the procedure as very important and another rating it as unimportant. Graduates were quick to note that if they were practicing in a different setting, these procedures might indeed be critical.

Finally, we did not measure the intensity of training or the methods of instruction for these 12 procedures. We did not review residents’ procedure logs or ask them to estimate their training hours or the number of procedures they performed during residency or after graduation. Also, it is highly likely that procedural instruction varied during the 10-year study period. For example, in training and practice there was a steady decrease in attention paid to diagnostic peritoneal lavage, while there was a sharp increase in emphasis on ultrasonography, CT scanning and procedural sedation. Also, newer instructional technologies (for example, simulations) may have been introduced in recent years. In our study, we were unable to measure or adjust for these temporal trends in procedural training.

In summary, it cannot be assumed that the results of this study will apply directly to other residencies or their graduates. However, the key message is that this evaluation technique may prove useful as other program directors assess their own curricula for areas of over- and under-training, taking into account the varied settings in which graduates practice.

CONCLUSION

Frequently, the design and evaluation of residency training programs are guided by national surveys, consensus reports and the dissemination of model curricula. However, local, program-specific evaluations are also important, and they can only be provided by recent graduates, who are uniquely qualified to identify areas of under- or over-training from a “real-world” perspective. Post-graduate surveys may be an important new paradigm for residency program evaluation and reform.

Footnotes

The authors would like to thank the following people for assistance and support during all phases of this study: Dr. Carol Hodgson, Dr. Gretchen Guiton, Dr. Alison Mann, and the Teaching Scholars Program at the University of Colorado Denver, School of Medicine.

Supervising Section Editor: Scott E. Rudkin MD, MBA

Submission history: Submitted July 31, 2008; Revision Received January 15, 2009; Accepted January 15, 2009

Full text available through open access at http://escholarship.org/uc/uciem_westjem

Address for Correspondence: Jeff Druck, MD, Division of Emergency Medicine, Department of Surgery, University of Colorado, Denver, 12401 E. 17th Ave. B-215 Aurora, CO 80045

Email: Jeffrey.Druck@UCDenver.edu

Conflicts of Interest: By the WestJEM article submission agreement, all authors are required to disclose all affiliations, funding sources, and financial or management relationships that could be perceived as potential sources of bias. The authors disclosed none.

REFERENCES

1. Kilian BJ, Binder LS, Marsden J. The emergency physician and knowledge transfer: continuing medical education, continuing professional development, and self-improvement. Acad Emerg Med. 2007;14:1003–7.

2. 2007 EM Model Review Task Force. Thomas HA, Beeson MS, Binder LS, et al. The 2005 Model of the Clinical Practice of Emergency Medicine: The 2007 Update. Ann Emerg Med. 2008;52:e1–17.

3. Thomas HA, Binder LS, Chapman DM, et al. The 2003 Model of the Clinical Practice of Emergency Medicine: The 2005 Update. Acad Emerg Med. 2006;13:1070–1073.

4. Conigliaro RL, Hess R, McNeil M. An innovative program to provide adequate women’s health education to residents with VA-based ambulatory care experiences. Teach Learn Med. 2007;19:148–53.

5. Kern DC, Parrino TA, Korst DR. The lasting value of clinical skills. JAMA. 1985;254:70–6.

6. Wayne DB, DaRosa DA. Evaluating and enhancing a women’s health curriculum in an internal medicine residency program. J Gen Intern Med. 2004;19:754–759.

7. Accreditation Council for Graduate Medical Education. The ACGME Outcome Project: An Introduction. Rev 2005. Available at: www.acgme.org/outcome/project/OPintrorev1_7-05.ppt. Accessed April 19, 2008.

8. Plauth WH, 3rd, Pantilat SZ, Wachter RM, et al. Hospitalists’ perceptions of their residency training needs: results of a national survey. Am J Med. 2001;111:247–54.

9. Hockberger RS, Binder LS, Graber MA, et al. American College of Emergency Physicians Core Content Task Force II. The model of the clinical practice of emergency medicine. Ann Emerg Med. 2001;37:745–70.