| Author | Affiliation |
|---|---|
| James M. Leaming, MD | Penn State University College of Medicine, Hershey, Pennsylvania |
| Spencer Adoff, MD | Penn State University College of Medicine, Hershey, Pennsylvania |
| Thomas E. Terndrup, MD | Penn State University College of Medicine, Hershey, Pennsylvania |


ABSTRACT

Introduction: We sought to develop and test a computer-based, interactive simulation of a hypothetical pandemic influenza outbreak. Fidelity was enhanced with integrated video and branching decision trees, built upon the 2007 federal planning assumptions. We conducted a before-and-after study of the simulation’s effectiveness in eliciting participants’ beliefs regarding their own hospitals’ mass casualty incident preparedness.

Methods: Development: Using a Delphi process, we finalized a simulation that serves up more than 50 key decisions to 6 role-players on networked laptops in a conference area. The simulation played out an 8-week scenario, beginning with pre-incident decisions. Testing: Role-players and trainees (N=155) were guided by a facilitator as they made decisions during the pandemic. Because decision responses vary, the simulation plays out differently each time, and a casualty counter quantifies hypothetical losses. The facilitator reviews and critiques key factors for casualty control, including effective communications, working with external organizations, development of internal policies and procedures, maintaining supplies and services, technical infrastructure support, public relations and training. Pre- and post-survey data from trainees were compared.

Results: Post-simulation trainees indicated a greater likelihood of needing to improve their organization in terms of communications, mass casualty incident planning, public information and training. Participants also recognized which key factors required immediate attention at their own home facilities.

Conclusion: The computer simulation was effective in providing a facilitated environment for determining the perception of preparedness, evaluating general preparedness concepts, and introducing participants to critical decisions involved in handling a regional pandemic influenza surge.

INTRODUCTION

The probability of a highly pathogenic influenza pandemic has been a topic of interest among healthcare providers, the public, and health policy personnel at regional, state, national and international levels. Since the first influenza pandemic was described in 1580, over 30 recognized influenza pandemics have occurred. Three, occurring in the last century, varied in lethality from 1 million deaths worldwide in 1968 to 50 million deaths worldwide in 1918.1 The Influenza A virus (H5N1), or avian influenza, and H1N1, or swine flu, have been of more recent concern. First identified in Hong Kong in 1997, the outbreak of the H5N1 flu strain ultimately resulted in 18 infections and 6 deaths.2 Since its original mutation, the H5N1 avian flu virus has spread to over 15 countries, infecting 552 people and killing 322 as of April 2011, a mortality rate of approximately 58%.3 If the H5N1 strain had mutated and developed the ability to transfer via human contact, the world could have been on the brink of a pandemic.4 More recently, the H1N1 virus was declared a pandemic in 2009, with an estimated 43 to 89 million persons infected through April 2010.5

The civilian population depends on the preparedness and response of the medical community for significant illness management and crisis mitigation, yet preparing for and handling any pandemic influenza outbreak is a difficult task. Methods to enhance preparedness may include educational sessions, as well as table-top and large-scale exercises. Current literature has shown that computer-based high-fidelity simulations may be effective as training tools.6 A computer-based simulation of an influenza outbreak provides a repeatable approach to stimulate integrated decision-making and discuss thought processes.7 Reports suggest that such simulations may offer an authentic, low-risk learning environment that teaches teamwork competencies and promotes insightful and systematic practice.8 Higher-fidelity simulations (i.e., those that produce a realistic experience for trainees through use of multimedia inputs) have also been shown to receive more positive feedback from participants than lower-fidelity simulations (e.g., slower paced, pre-determined table-top and paper-based exercises), suggesting that they are a more effective method of training and education because of their authentic nature and real-time decision-making components.9 By definition, a high-fidelity simulation more closely resembles the actual event it represents, for example by using realistic materials, equipment, story boards, sounds, and visual aids. Since high-fidelity models represent scenarios in a more realistic fashion, in theory, they are more likely to produce results similar to real life.

We report briefly on the development and initial testing of this computer-based simulation for a putative pandemic influenza outbreak. We used a Delphi-method for initial development and prioritization of learning principles. We then tested the simulation on regional hospital participant volunteers, in order to quantify the before-and-after simulation knowledge about 7 key areas.

METHODS

Development

We used a Delphi method, a structured communication technique, with content and regional experts, together with the federal assumptions of the 2007 National Incident Management System (NIMS) for a pandemic outbreak, to derive the key categories and decisions to build into the computer program. Three to six rounds of scenario review were performed with subject matter experts (SMEs) and key role-player experts until the computer program was finalized and placed into run mode. The final simulation was intended to produce documentable and reproducible actions that could be performed during these trainings. These actions were not intended to teach definitive care should an actual outbreak occur. Rather, the practice decisions were intended to broaden trainees’ understanding of evolving crises, enhance insight into current preparedness gaps, and allow them to test their own strategies for response prior to an incident. The main objective was to employ this simulation to assess participants’ beliefs regarding their own hospitals’ mass casualty incident preparedness, which was measured using pre- and post-event surveys.

Participant Population

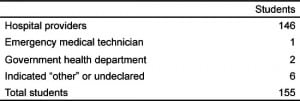

Participants in testing this system were employees of 7 hospitals within south central Pennsylvania who were not part of the development team. All participants were volunteers who were invited to attend the educational session. The study group totaled 155 personnel and consisted of hospital-based personnel (Table 1). Participating hospitals ranged from a small community hospital of 125 beds to much larger medical facilities located in micro-metropolitan settings.

Simulation Workshop Session

The primary goal of this simulation testing experience was to introduce personnel to the critical decisions involved in handling a pandemic influenza surge with overwhelming patient volume and to assess their opinion of current preparedness models at their home institution. Teaching methods to improve decision making or to change current models at the home institution was not part of this educational model. The session represented an introductory exercise intended to inform and raise awareness about organizational surge preparedness gaps. Desired outcomes were that attendees would (1) understand that mitigation is highly interdependent because effects of decisions cascade to affect other responders in the surge response; and (2) be stimulated to generate ideas for creating and implementing policies and procedures for community mitigation strategies during an outbreak. All simulations took place in conference rooms within the hospitals, where participants could sit at a desk in front of a simulation computer. In instances where there were more participants than leadership positions, participants were encouraged to work in small groups and make decisions together.

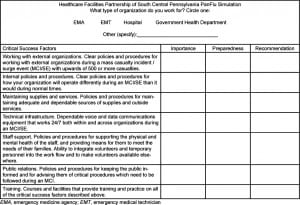

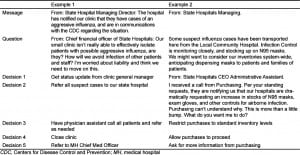

Training goals were identified, discussed, and deliberated throughout the session, and further emphasized in the post-simulation discussion. During this post-simulation discussion, the death toll, surge percentage, and the 7 critical success factors were discussed. Each participant was asked to complete a confidential pre- and post-simulation questionnaire that examined their perspective regarding the importance of, and preparedness for, influenza response. A sample survey used in the simulation workshop sessions can be found in Table 2. This questionnaire also assessed the simulation’s influence on participant awareness of the cascading effects of decision making, and additionally gauged participants’ beliefs surrounding the 7 success factors. Each participant provided a self-assessed rating for each of the factors, ranging from low (1) to essential (5). A recommendation section similarly asked participants to rank how quickly they felt their organization should take steps to correct gaps identified during the simulation (within each key factor), on a scale of 1 (deserves immediate attention) to 3 (does not need attention at this time). This study was approved by the local institutional review committee. We compared before- and after-simulation perceptions using a Student’s t-test, with p<0.05 considered significant.
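As an illustration of the comparison described above, the following is a minimal sketch of a two-sample Student’s t statistic with pooled variance. The text does not state whether a paired or unpaired test was used, so the unpaired, equal-variance form is an assumption here, and the ratings are hypothetical values on the 1 to 5 scale, not study data. The function name `student_t` is illustrative only.

```python
import math
from statistics import mean, variance


def student_t(before, after):
    """Two-sample Student's t statistic, assuming equal variances (pooled)."""
    n1, n2 = len(before), len(after)
    # Pooled sample variance across both groups
    pooled = ((n1 - 1) * variance(before) + (n2 - 1) * variance(after)) / (n1 + n2 - 2)
    t = (mean(after) - mean(before)) / math.sqrt(pooled * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2  # statistic and degrees of freedom


# Hypothetical importance ratings (1 = low, 5 = essential); NOT study data
pre = [3, 4, 3, 2, 4, 3, 3, 4]
post = [4, 5, 4, 4, 5, 4, 3, 5]

t_stat, dof = student_t(pre, post)

# Two-sided critical value of t for alpha = 0.05 with 14 degrees of freedom
T_CRIT_DF14 = 2.145
significant = abs(t_stat) > T_CRIT_DF14
print(f"t = {t_stat:.3f}, df = {dof}, significant at 0.05: {significant}")
```

With real survey data, a statistics package would normally report the exact p-value; the fixed critical value is used here only to keep the sketch dependency-free.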

RESULTS

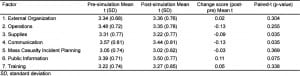

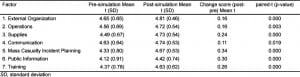

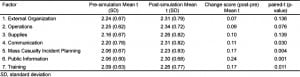

We surveyed 155 hospital personnel both before and after the simulation training, and then compared and analyzed their responses. Evaluation data were considered only if at least 80% of the 42 possible questions were completed per survey; 133 (86%) participants met this criterion and formed the sample population. Hospital personnel comprised the majority of those surveyed, at 124 of 133 (93%). A comparison of the pre-simulation and post-simulation data is presented in terms of perceived preparedness of participants’ own facilities (Table 3) and personal importance (Table 4) in 7 key categories/factors (external organization, operations, supplies, communication, mass casualty incident planning, public information, and training). While preparedness ratings were stable and not statistically different, there was a trend toward a lower perception of preparedness after training in 2 of the 7 key factor areas: supplies and communication. In other words, after the simulation, participants felt their facility was less prepared than they had thought before the simulation. We interpreted this to mean that, after completing the simulation, participants realized they were less prepared to manage supplies and use voice and data communication systems (Table 3). Pre-simulation and post-simulation total scores for the “importance” subsection (Table 4) revealed a statistically significant increase in mean ratings in all key factors for successful management of a pandemic influenza outbreak (all p-values <0.05). In the third evaluation domain, recommendation ratings by trainees, 4 of the 7 factors (communication, mass casualty incident planning, public information, and training) achieved statistical significance (Table 5). Finally, participants reported an increased perception of the critical need to enact changes in each of these areas at their own facilities.
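The inclusion rule above can be made concrete with a short sketch. It assumes that "at least 80% of 42 questions" rounds up to 34 answered questions and that an unanswered question is encoded as `None`; both are assumptions for illustration, as are the function names.

```python
import math

TOTAL_QUESTIONS = 42
MIN_PERCENT = 80


def min_answered(total=TOTAL_QUESTIONS, percent=MIN_PERCENT):
    """Smallest number of answered questions that meets the completion threshold."""
    return math.ceil(total * percent / 100)


def is_evaluable(answers):
    """Return True if a survey has enough answered questions to be analyzed.

    `answers` holds one entry per question; None marks an unanswered
    question (an assumed encoding, not taken from the study).
    """
    answered = sum(a is not None for a in answers)
    return answered >= min_answered(len(answers))


# 80% of 42 is 33.6, so 34 answered questions are needed
threshold = min_answered()

# Share of the 155 surveyed participants retained in the sample (133)
retained_percent = round(100 * 133 / 155)
print(threshold, retained_percent)
```

For example, a survey with 34 answers and 8 blanks would be retained, while one with 33 answers and 9 blanks would be excluded.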

DISCUSSION

We describe the development and initial testing of a computer-based simulation for training in key decision making for a hypothetical pandemic influenza outbreak. We conducted the simulation using a networked, computer-based system, and developed it on the premise that instructional facilitators would be able to: (1) administer the same pandemic influenza exercise at many institutions; (2) use the simulation over an extended period of time; and (3) experience different simulation outcomes based on the decisions participants made in their respective training days. Emergency physicians may find such training valuable for ensuring uniformity of training, capturing the attention of staff and administrative personnel on a challenging topic where community engagement is required for success, and providing a leadership opportunity for facilitators to improve public health preparedness. Individual participants reported real-life feelings of performance pressure and other emotional responses as a result of the realistic visual and auditory inputs.

We contracted with Crisis Simulations International (Portland, Oregon USA) to design and develop a computer-based simulation using their proprietary DXMA™ architecture. Two core principles of simulation design, interdependency and cascading decisions, differentiate this computer-based simulation training from other approaches. Just as in real life, decisions do not happen in isolation. Within the simulation, a trainee may be faced with a decision that is triggered by an event occurring in the scenario or by decisions made by other roles/trainees earlier in simulation time. This is referred to as interdependency between roles. A decision made by role 1 leads to downstream effects that then trigger a decision to be made by role 2. This 2-decision series is called a cascading decision, which is used throughout the pandemic influenza simulation.
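The idea of a cascading decision can be sketched in a few lines. This is a hypothetical illustration only: the proprietary DXMA™ architecture is not described in enough detail to reproduce, and the class, function, and decision names below, as well as the decision content, are invented for the example. Only the role titles come from the simulation.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Decision:
    key: str
    role: str
    prompt: str
    # Maps a chosen option to the keys of the decisions it triggers downstream
    triggers: dict = field(default_factory=dict)


def run(decisions, start_key, choose):
    """Serve decisions in order, queuing any decision triggered by each choice."""
    queue = deque([start_key])
    log = []
    while queue:
        decision = decisions[queue.popleft()]
        option = choose(decision)
        log.append((decision.role, decision.key, option))
        # Interdependency: one role's choice creates work for another role
        queue.extend(decision.triggers.get(option, []))
    return log


# Hypothetical 2-decision cascade; the content is illustrative only
decisions = {
    "cancel_elective": Decision(
        key="cancel_elective",
        role="Hospital CEO",
        prompt="Cancel elective surgeries?",
        triggers={"yes": ["reassign_staff"]},
    ),
    "reassign_staff": Decision(
        key="reassign_staff",
        role="Hospital operations chief",
        prompt="Reassign surgical staff to the surge unit?",
    ),
}

log_yes = run(decisions, "cancel_elective", lambda d: "yes")
log_no = run(decisions, "cancel_elective", lambda d: "no")
print(log_yes)
print(log_no)
```

When the first role answers "yes", the second role is served a follow-on decision; when it answers "no", the cascade ends after one step. The sketch assumes the trigger graph has no cycles, since a cycle would keep the queue from emptying.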

Based on a response system organized along the National Incident Management System (NIMS) footprint, we incorporated specified 2007 federal planning assumptions relating to highly pathogenic avian influenza into the computer program. Two educational consultants transformed the design document constructed by the SMEs into an educational assessment tool. Personalized facilitation provided with the exercise was designed by educational specialists, using knowledge gained from SMEs in the fields of emergency medicine, emergency preparedness, emergency medical service, hospital administration and infectious disease. This facilitator discussed key points and topics before, during and after this simulation to help mediate discussion and learning objectives.

To increase the accuracy of the simulation experience, the SMEs determined 7 critical success factors: (1) have clear policies and procedures for working with external organizations; (2) have clear internal policies and procedures for operation; (3) maintain adequate and dependable resources, supplies, and outside services; (4) have dependable voice and data communication both within and across organizations; (5) support healthcare staff physically and psychologically; and (6) have policies and procedures for keeping the public informed.

Leadership positions that would be involved in such a crisis were designated. The positions were a hospital chief executive officer (CEO), an emergency medical service representative, an emergency management agency worker, an employee of the state health department, a hospital incident commander, and a hospital operations chief. Throughout the simulation duration, each leadership position participant was presented with messages, questions and decision options that would be expected in a real-life response scenario. Table 6 exhibits 2 decisions served up to the CEO.

The simulation provided decisions in a time-based sequence that simulated the first 8 weeks of the influenza outbreak within one community. The DXMA™ architecture was unique in that it was designed to use the interdependent and cascading decisions made by participants in a collapsed timeframe. Thus, the consequences of choices made by each leadership role affected the leader themselves, fellow participants, and the overall outcome of the exercise. These consequences were not necessarily linear; second- and third-order consequences resulted from decisions as well.

Audiovisual news reports were displayed as “live” video feeds triggered by participant decisions. The video streams added a sense of realism to the simulation and were broadcast at regular intervals. After the 1-hour simulation session, a community influenza infection monitor, a case mortality estimate, and an infection percentage estimate provided participants further insight into the effects of their decisions. The data for these estimates were taken, as determined by the SMEs, from 2 influenza surge programs created by the Centers for Disease Control and Prevention (CDC): FluAid 2.0 and FluSurge 2.0. These federal flu preparedness models aim to provide hospital administrators and public health officials an estimate of the surge in demand for hospital-based services during a hypothetical influenza pandemic. They take into account the population of a respective location, as well as the number of emergency departments and intensive care unit (ICU) beds. Based on this data input, the number of infected patients requiring hospitalization is estimated, as is the associated mortality estimate.10 As one of its “realistic features,” the simulator presents environmental disturbances systematically. During the central point of the simulation, participants received messages, questions, “live” video feeds and infection monitor updates at a pre-determined rate. This added to the fidelity and interactive nature of the simulation.

This simulation was intended to model a complex system, give participants an understanding of the interrelated repercussions of the decisions undertaken, and give participants perspective to analyze their own facilities’ preparedness. The exercise made evident to participants the various types of responses needed to effectively manage a pandemic influenza outbreak. The workshop session also revealed specific observations related to disaster preparedness medicine; for example, participants were less prepared than they had thought before participating in the simulation. Foremost, however, is the fact that all 7 key success factors showed a significant increase in their importance ratings, leading to the conclusion that this simulation increased awareness of these issues. Further work is needed to translate knowledge into action, however, if sustainable changes in pandemic influenza readiness are to be realized. After completing the simulation training, participants will presumably demonstrate improved comprehension of the means necessary to prepare for a pandemic influenza outbreak, yet this was not the main outcome. A useful training environment would provide the opportunity for personal growth and understanding, in anticipation of further organization and practice to decrease the effects of a potentially devastating viral illness. These include many key factors mentioned in the corresponding survey, such as increasing communications between public and crisis management, implementing/modifying triage protocols, and other modalities encountered within the simulation.

The purpose of the simulation workshop experience was to expose hospital personnel to an interactive platform that stimulated critical decision making and increased awareness of the many responsibilities personnel have in preparing for and responding to a pandemic influenza outbreak. The objective was not to evaluate participant decisions as right or wrong, but rather to discuss the options and observe how each decision affects the final result of the surge. In the same respect, this was not a session in which participants were educated or trained on mass casualty incident response.

LIMITATIONS

We rated each of the 7 factors at baseline and at the completion of the simulation, but no long-term follow-up data has been collected to evaluate sustainability. A secondary contact is needed to address this next step. The participation in a real event is the best way to achieve the greatest amount of experience when compiling information on any educational assessment. As with any other training method, time frames of the educational exposure are reduced to have a realistic and obtainable educational experience. This simulation breaks down an influenza outbreak from weeks to hours; this of course is not ideal, but an accepted practical approach to the time constraints of personnel. Another major limitation in this study stems from the fact that participants’ occupations were not taken into account (whether they were nurses, physicians, unit managers, or administrators) when “playing” each role during the simulation. In future simulations, each participant should perhaps play a function that reflects their respective emergency response position. However, several trainees commented on the positive value of assuming other roles than they would ordinarily occupy, as it contributed to their overall knowledge of mass casualty management. Additionally, although the results of the death toll and surge capacity created were discussed in the facilitation portion of the training day, there is no mathematical model to provide participants scientifically valid feedback as to the consequences accrued from their decisions. While a test of regional personnel may be unsatisfactory in some respects, beta versions of this simulation were played with SMEs, including those outside the development group. We have found that educational translation is perceived by many as better when the SME is asked to play a role within the simulation outside their normal domain. 
Many indicated that it gave them insight into what others are or may be doing, which could conflict with the decisions they themselves make during an incident.

CONCLUSION

Development and testing of a computer-based, high-fidelity simulation trainer for a pandemic influenza outbreak can provide a platform for improved perceptions, importance, and recommendations for progress. Reproducibility and the inter-connectedness of decisions can be highlighted within the simulation training, which produces a full-scale weeks-long incident in a few hours. For emergency providers and trainers, this simulation is highly reproducible and can be facilitated to small and larger audiences.

Acknowledgments

Supported through funding made possible by the United States Department of Health and Human Services, Office of Preparedness and Emergency Operations, Division of National Healthcare Preparedness Programs (Grant No. HFPEP070002-01-01). We would also like to thank Dennis Damore and Crisis Simulations International, LLC (CSI) for their assistance in the development of the high-fidelity pandemic influenza simulation program. Acknowledgments also go to the Healthcare Facilities Partnership of South Central Pennsylvania for their participation in this program, and to Alicia Shields and Carla Perry, who supported the data collection portion of the study.

Footnotes

Supervising Section Editor: Sanjay Arora, MD

Submission history: Submitted August 28, 2011; Revisions received January 12, 2012; Accepted April 16, 2012

Full text available through open access at http://escholarship.org/uc/uciem_westjem

DOI: 10.5811/westjem.2012.3.6882

Address for Correspondence: James M. Leaming, MD. Penn State University College of Medicine, 500 University Drive, Hershey, PA 17033. Email: jleaming@hmc.psu.edu.

Conflicts of Interest: By the WestJEM article submission agreement, all authors are required to disclose all affiliations, funding sources, and financial or management relationships that could be perceived as potential sources of bias. The authors disclosed none.

REFERENCES

1. Lazzari S, Stohr K. Avian influenza and influenza pandemics. Bull World Health Organ. 2004;82:242.

2. H5N1 avian influenza: timeline of major events. Global alert and response page. World Health Organization Web site. Available at: http://www.who.int/csr/disease/avian_influenza/H5N1_avian_influenza_update.pdf. Accessed July 7, 2011.

3. Influenza at the human-animal interface summary and assessment of April 2011 events. Global alert and response page. World Health Organization. Available at: http://www.who.int/csr/disease/avian_influenza/Influenza_Summary_IRA_HA_interface.pdf. Accessed July 7, 2011.

4. Avian influenza (“bird flu”) fact sheet. Epidemic and pandemic alert and response page. World Health Organization. Available at: http://www.who.int/mediacentre/factsheets/avian_influenza/en/index.html. Accessed July 7, 2011.

5. Center for Disease Control; Updated CDC estimates of 2009 H1N1 Influenza cases, hospitalizations and deaths in the United States, April 2009 – April 10, 2010. Web site. Available at: http://www.cdc.gov/h1n1flu/estimates_2009_h1n1.htm. Accessed July 7, 2011.

6. Gillett B, Peckler B, Sinert R, et al. Simulation in a disaster drill: comparison of high-fidelity simulators versus trained actors. Acad Emerg Med. 2008;15:1144–1151.

7. Kobayashi L, Shapiro MJ, Suner S, et al. Disaster medicine: the potential role of high-fidelity medical simulation for mass casualty incident training. Med Health. 2003;86:196–200.

8. Beaubien JM, Baker DP. The use of simulation for training teamwork skills in health care: how low can you go? Qual Saf Health Care. 2004;13:51–56.

9. Havighurst L, Fields L, Fields C. High vs low fidelity simulations: does the type of format affect candidates’ performance or perceptions? Conference presentations, IPMAAC website. Available at: http://www.ipmaac.org/conf/03/havighurst.pdf. Accessed June 4, 2008.

10. Beaton RD, Johnson LC. Instrument development and evaluation of domestic preparedness training for first responders. Prehosp Disaster Med. 2002;17:119–125.